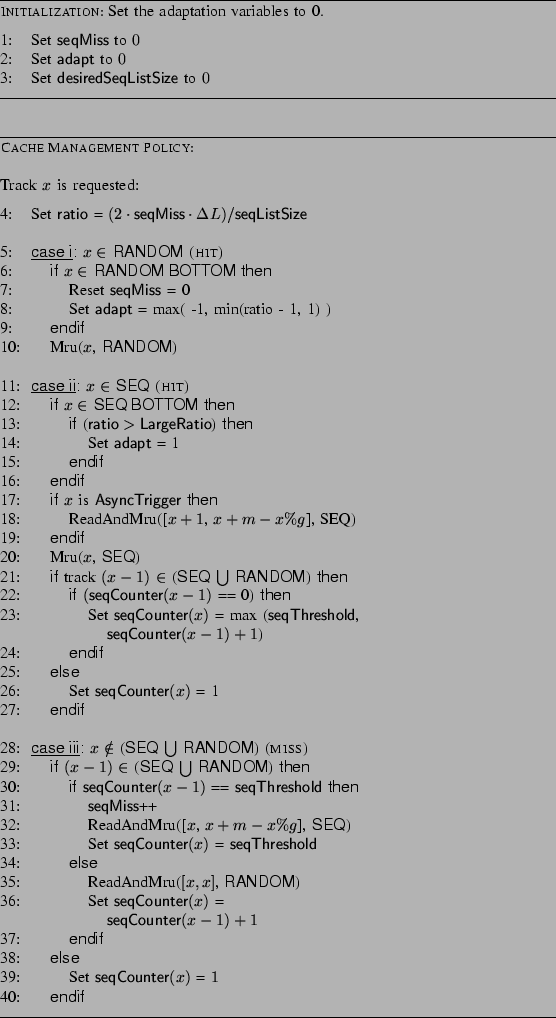

We now weave together the above analysis and insights into a concrete, adaptive (self-tuning) algorithm that dynamically balances the cache space allocated to RANDOM and SEQ data under different conditions such as different number of sequential/random streams, different data layout strategies, different think times of sequential/random streams, different cache-sensitivities/footprints of random streams, and different I/O intensities. The complete algorithm is displayed in Figure 4. SARC is extremely simple to implement and has virtually no computational/space overhead over an LRU-based implementation.

|

In our experiments, we have set ![]() to be

to be ![]() of the

cache space. Any other small fraction would work as well.

of the

cache space. Any other small fraction would work as well.

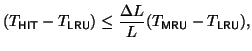

In the algorithm, we will need to determine if a hit in one of the

lists was actually in its bottom (see lines 6 and 12). Of course,

one could maintain separate fixed sized bottom lists for both the

lists. However, with an eye towards simplification and

computational efficiency, we now describe a time-based

approximation to achieve nearly the same effect. We now describe

how to determine if the a hit in SEQ lies in its bottom

![]() . Let

. Let

![]() and

and

![]() denote the time

stamp of the MRU and LRU tracks, respectively, in SEQ. Let

denote the time

stamp of the MRU and LRU tracks, respectively, in SEQ. Let ![]() denote the size of SEQ in pages. Let

denote the size of SEQ in pages. Let

![]() denote the time stamp of the hit track. Now, if

denote the time stamp of the hit track. Now, if

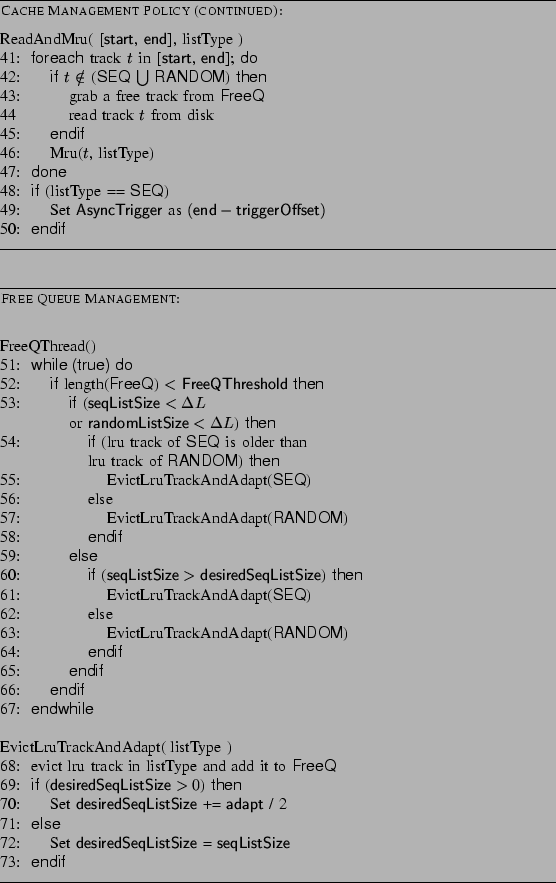

The algorithm uses the following constants: ![]() (lines 18 and 32)

denotes the degree of synchronous and asynchronous read-ahead;

(lines 18 and 32)

denotes the degree of synchronous and asynchronous read-ahead; ![]() (lines 18 and 32) denotes the number of disks in a RAID group;

(lines 18 and 32) denotes the number of disks in a RAID group;

![]() (line 49) is the offset from the end of a

prefetched group of tracks and is used to demarcate the

asynchronous trigger; LargeRatio (line 13) is a threshold,

say,

(line 49) is the offset from the end of a

prefetched group of tracks and is used to demarcate the

asynchronous trigger; LargeRatio (line 13) is a threshold,

say, ![]() , to indicate that the ratio has become much larger

than

, to indicate that the ratio has become much larger

than ![]() ; and FreeQThreshold (line 52) denotes the desired

size of the FreeQ. Shark uses RAID arrays (either RAID-5 or

RAID-10) at the back-end. Our machine (see

Section IV-B) was configured with RAID-5 (6 data disks

+ parity disk + spare disk) meaning that

; and FreeQThreshold (line 52) denotes the desired

size of the FreeQ. Shark uses RAID arrays (either RAID-5 or

RAID-10) at the back-end. Our machine (see

Section IV-B) was configured with RAID-5 (6 data disks

+ parity disk + spare disk) meaning that ![]() . RAID allows for

parallel reads since logical data is striped across the physical

data disks. To leverage this, setting

. RAID allows for

parallel reads since logical data is striped across the physical

data disks. To leverage this, setting ![]() as a multiple of

as a multiple of ![]() is

meaningful. We set

is

meaningful. We set ![]() . Finally, we chose

. Finally, we chose

![]() to be

to be ![]() .

.

The algorithm is self-explanatory. We now briefly explain important portions of the algorithm in Figure 4 in a line-by-line fashion.

Lines 1-3 are used during the initialization phase only. The

counter

![]() tracks the number of sequential misses

between two consecutive bottom hits in RANDOM, and is

initialized to zero. The variable

tracks the number of sequential misses

between two consecutive bottom hits in RANDOM, and is

initialized to zero. The variable

![]() is

the desired size of SEQ, and is initially set to zero

meaning adaptation is shut off. The adaptation will start only

after SEQ is populated (see lines 69-73). The variable

is

the desired size of SEQ, and is initially set to zero

meaning adaptation is shut off. The adaptation will start only

after SEQ is populated (see lines 69-73). The variable

![]() determines the instantaneous magnitude and direction of

the adaptation to

determines the instantaneous magnitude and direction of

the adaptation to

![]() .

.

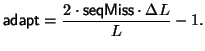

Lines 4-50 describe the cache management policy. The quantity

![]() in line 4 is derived from (6). Lines

5-10 deal with the case when a track in RANDOM is hit. If

the hit is in the bottom portion of the RANDOM list (line

6), then

in line 4 is derived from (6). Lines

5-10 deal with the case when a track in RANDOM is hit. If

the hit is in the bottom portion of the RANDOM list (line

6), then

![]() is reset to zero (line 7) since we are

interested in number of sequential misses between two successive

hits in the bottom of RANDOM. Line 8, sets the variable

is reset to zero (line 7) since we are

interested in number of sequential misses between two successive

hits in the bottom of RANDOM. Line 8, sets the variable

Lines 11-27 deal with the case when a track in SEQ is hit.

If the hit is in the bottom portion of the SEQ list (line

12) and

![]() has become large (line 13), in other words,

no hit has been observed in the bottom of the RANDOM list

while comparatively large number of sequential misses have been

seen on the SEQ list, then set

has become large (line 13), in other words,

no hit has been observed in the bottom of the RANDOM list

while comparatively large number of sequential misses have been

seen on the SEQ list, then set

![]() to

to ![]() (line

14) meaning that increase

(line

14) meaning that increase

![]() at the

fastest rate possible. Now, if the hit track is an asynchronous

trigger track (line 17), then asynchronously read-ahead the next

sequential group of tracks (line 18). Lines 21-27 describe how the

sequential detection mechanism in Section II-A is

implemented.

at the

fastest rate possible. Now, if the hit track is an asynchronous

trigger track (line 17), then asynchronously read-ahead the next

sequential group of tracks (line 18). Lines 21-27 describe how the

sequential detection mechanism in Section II-A is

implemented.

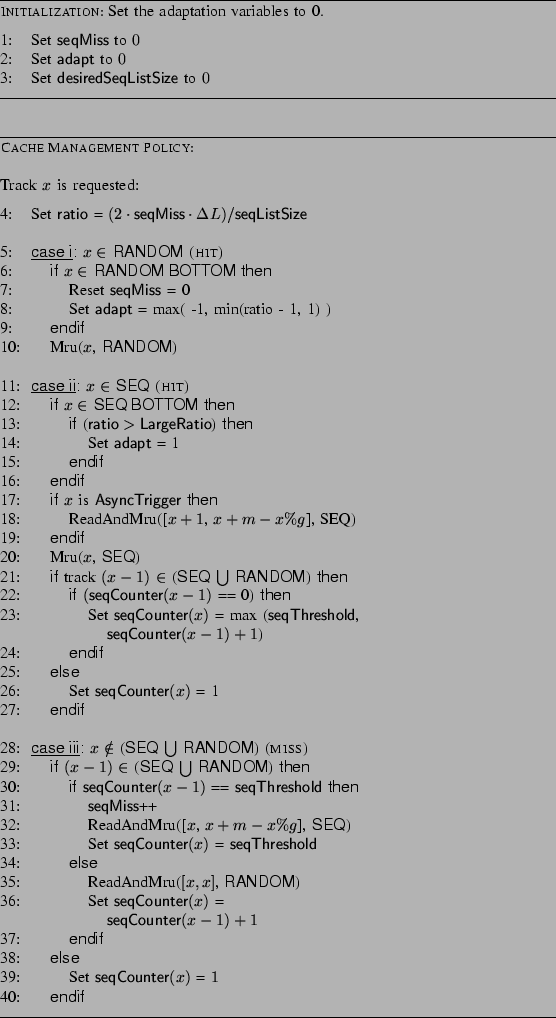

Lines 28-40 deal with a cache miss. For a sequential miss (lines 29-31), synchronously read-ahead a sequential group of tracks (line 32). The remaining lines deal with sequential detection mechanism in Section II-A.

Lines 41-50 (i) read the missing tracks from a given range of tracks; (ii) places all tracks in the given range at the MRU position; and (iii) set the asynchronous trigger.

Lines 51-73 implement the cache replacement policy and carry out

the adaptation. As is typical in multi-threaded systems, we assume

that these lines run on a separate thread (line 51). If the size

of the free queue drops below some predetermined threshold (line

52), then tracks are evicted from SEQ if it exceeds

![]() and tracks are evicted from RANDOM

otherwise. In either case, the evicted tracks are placed on the

free queue. Observe that SARC becomes active (lines 60-64)

only if the sizes of both the SEQ and the RANDOM list

exceed

and tracks are evicted from RANDOM

otherwise. In either case, the evicted tracks are placed on the

free queue. Observe that SARC becomes active (lines 60-64)

only if the sizes of both the SEQ and the RANDOM list

exceed ![]() . Otherwise, a simple LRU eviction (lines

54-58) is done. Whenever the utility of one of the two lists

becomes so small when compared to the utility of the other list

that its size eventually drops below

. Otherwise, a simple LRU eviction (lines

54-58) is done. Whenever the utility of one of the two lists

becomes so small when compared to the utility of the other list

that its size eventually drops below ![]() , we do not want to

waste even

, we do not want to

waste even ![]() amount of cache, and revert back to LRU. Whenever both list sizes exceed

amount of cache, and revert back to LRU. Whenever both list sizes exceed ![]() , SARC takes

over. Finally, lines 68-73 evict the LRU track from the

desired list, and effect an adaption as already described above.

, SARC takes

over. Finally, lines 68-73 evict the LRU track from the

desired list, and effect an adaption as already described above.

Our description of SARC is now complete.