|

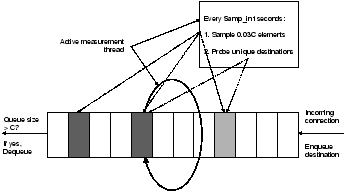

Similar to passive measurement, the active measurement scheme also maintains a hash table of the performance estimates to candidate destinations over the three providers. For active measurement, we use two techniques to identify which destinations should be monitored.

FrequencyCounts. Just like the passive measurement mechanism, in this scheme we track the number of client requests directed to each destination. Every

SlidingWindow. This scheme maintains a window of size

The two active measurements schemes have their respective advantages and disadvantages. Notice that both the schemes effectively sample the performance to destinations that are accessed more often relative to others. However, there are a few key differences. First, FrequencyCounts is deterministic since it works with a reasonably precise set of the top destinations by popularity. SlidingWindow, on the other hand, may either miss a few popular destinations, or sample a few unpopular destinations. Second, FrequencyCounts in its simplest form, cannot easily track small, short-term shifts in the popularity of the destinations. These new, temporarily-popular destinations may not receive enough requests to exceed the threshold and force performance sampling for them, even though they are popular for a short time. SlidingWindow can effectively track small shifts in the underlying popularity distribution of the destinations and try to optimize performance to such temporarily popular destinations.

Probe operation. Once a destination is selected for active probing, the active measurement scheme sends three probes, with different source IP addresses, corresponding to the three providers and waits for the destination to respond. Since we found that a large fraction of popular Web sites filter ICMP ECHO_REQUEST packets, we employ a TCP-based probing mechanism. Specifically, we send a TCP SYN packet with the ACK bit set to port 80 and wait for an RST packet from the destination. We use the elapsed time as a sample of the turn-around time performance. We found that most sites respond promptly to the SYN+ACK packets.

When a response is received, we update the performance estimates to the destination for the corresponding provider, along with the measurement timestamp. As described above, we update the performance estimate using the EWMA computation. If no response is received from a destination (which has an entry in the performance hash table), then a large positive value is used as the current measurement sample of the performance, and the performance is updated accordingly.