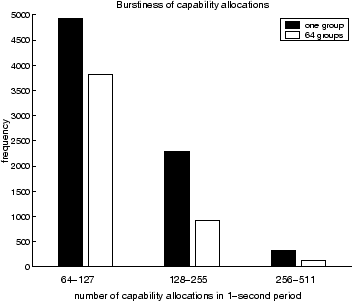

Is the capability group method beneficial in practice? In particular, do capability groups reduce the burstiness of capability allocations, thus reducing the chance of the metadata server being overloaded? To answer this question, we simulated the behavior of secure NADs using the trace of a 500 GB file system used by about 20 researchers over 10 days [21]. The results are shown in Figure 7, which is a histogram of how many capability allocations the metadata server performed in each 1-second period. The amount of memory for capability storage was fixed at 64 KB.
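The per-second histogram can be reproduced from a list of allocation times. The sketch below is a minimal illustration, assuming the simulator logs one timestamp (in seconds) per capability allocation; the function names `allocations_per_second` and `histogram` and the default bucket width of 50 are hypothetical choices, not taken from the paper. Seconds with no allocations are simply absent from the result.

```python
import math
from collections import Counter
from typing import Iterable


def allocations_per_second(timestamps: Iterable[float]) -> Counter:
    """Count allocations in each 1-second period.

    `timestamps` is a hypothetical trace format: one allocation time in
    seconds per capability issued. Keys are period indices (floor of time).
    """
    return Counter(math.floor(t) for t in timestamps)


def histogram(per_second: Counter, bucket_width: int = 50) -> Counter:
    """Group 1-second periods by how many allocations they contain.

    Keys are bucket lower edges (0, bucket_width, 2 * bucket_width, ...);
    values are the number of periods falling in that bucket. Idle periods
    (zero allocations) are not counted because they never appear as keys.
    """
    return Counter((n // bucket_width) * bucket_width for n in per_second.values())


per = allocations_per_second([0.1, 0.5, 0.9, 1.2, 3.7, 3.8])
print(per)                           # Counter({0: 3, 3: 2, 1: 1})
print(histogram(per, bucket_width=2))  # Counter({2: 2, 0: 1})
```

A bursty allocator shows up in such a histogram as a long tail of periods with very high counts, which is what the black and white bars in Figure 7 compare.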

The black bars show results for the straightforward method of Section 2.2, which uses a single bitmap to store all capabilities; this is precisely equivalent to the capability group method with a single group. The white bars are for a configuration using the same amount of memory, but divided into ![]() groups.
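One way to see why splitting a fixed budget into groups could smooth allocations is a small model of the store. The sketch below is hypothetical: the class `CapabilityStore` and its round-robin recycling policy are assumptions for illustration, not the mechanism defined in Section 2.2. Under this assumed policy, a full group is cleared and all capabilities it held are revoked, so their clients would need new ones; with one group (the single-bitmap case), everything is revoked at once.

```python
from typing import Tuple


class CapabilityStore:
    """Hypothetical capability store: a fixed bit budget split into groups.

    Assumed policy (illustrative only): new capabilities take the next free
    bit in the current group; when that group is full, the allocator moves
    round-robin to the next group and clears it, revoking every capability
    that group still holds.
    """

    def __init__(self, memory_bytes: int, num_groups: int) -> None:
        total_bits = memory_bytes * 8
        if num_groups < 1 or total_bits % num_groups != 0:
            raise ValueError("bit budget must divide evenly into groups")
        self.bits_per_group = total_bits // num_groups
        self.num_groups = num_groups
        self.live = [0] * num_groups  # live capabilities in each group
        self.current = 0              # group handing out new capabilities

    def allocate(self) -> Tuple[Tuple[int, int], int]:
        """Return ((group, bit), revoked) for one new capability.

        `revoked` counts existing capabilities invalidated to make room;
        under the assumed policy each of those clients must re-request.
        """
        revoked = 0
        if self.live[self.current] == self.bits_per_group:
            # Current group is full: advance round-robin and recycle it.
            self.current = (self.current + 1) % self.num_groups
            revoked = self.live[self.current]
            self.live[self.current] = 0
        bit = self.live[self.current]
        self.live[self.current] += 1
        return (self.current, bit), revoked


# Same 64 KB budget as in the experiment; 16 groups is an arbitrary choice.
single = CapabilityStore(64 * 1024, 1)
grouped = CapabilityStore(64 * 1024, 16)
print(single.bits_per_group, grouped.bits_per_group)  # 524288 32768
```

In this model, the single-bitmap store revokes all 524,288 capabilities in one step when it fills, and every affected client returns to the metadata server at roughly the same time. With 16 groups, each recycle revokes at most 32,768 capabilities, so the resulting re-requests arrive in smaller, more spread-out waves.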

Note that for this particular workload, the metadata server could hardly be described as "overloaded": its peak load of around 500 capabilities per second can easily be handled, since issuing a capability takes less than 2 ms. Nevertheless, these results demonstrate that the capability group approach substantially reduces burstiness. If the stress on the server were greater (e.g., if it served more disks), the capability group approach would improve performance by reducing periods of overload.
