Next: data blocking.

Up: 4. Memory bandwidth and

Previous: false sharing.

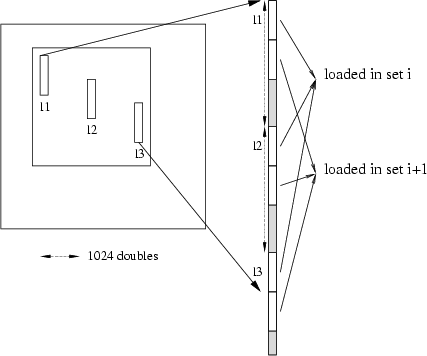

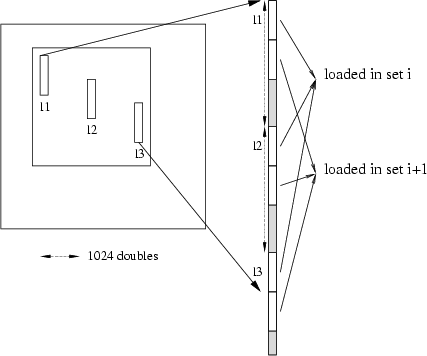

Mutual exclusion happens when two or more memory lines are needed but cannot
be in cache together because they map to the same cache line, so that each
successive access evicts the memory line previously loaded.

This is a real problem for direct mapped caches, where a memory line can be in
only one cache line. N way set associative caches solve this problem by
allowing a memory line to be in any of N different cache lines; these N
locations are called a set. This is the case for the P6 processors, where the
L1 cache is 2 way set associative, which avoids mutual exclusion for all
Level 1 BLAS with fewer than 3 vector operands (like ddot and daxpy).

Mutual exclusion can also happen for matrix operations like dgemm: when using
a block method, the leading dimension can be such that some memory lines share
the same cache set, which prevents more than N of them from being in cache at
the same time. In figure ![[*]](cross_ref_motif.png) the memory lines l1, l2
and l3 are spaced 1024 doubles apart (remember that the PII L1 cache has 256
sets, each containing 2 lines of 32 bytes). Since 1024 doubles are 8192 bytes,
exactly 256 x 32 bytes, the three lines map to the same L1 cache set, which
can hold only 2 different memory lines. Thus l1, l2, l3 and the subsequent
lines mutually exclude each other. The solution to keep the block in cache is
to copy it into contiguous memory, and this is the solution adopted in ATLAS.
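The arithmetic behind this example can be checked with a small model. The sketch below is only an illustration, not ATLAS code: it assumes the cache geometry quoted above (256 sets, 2 lines per set, 32-byte lines), an LRU replacement policy, and a block that starts at byte address 0. The names `set_index` and `count_misses` are hypothetical helpers introduced here.

```python
from collections import OrderedDict

LINE_BYTES = 32     # cache line size quoted in the text
NUM_SETS = 256      # number of sets quoted in the text
WAYS = 2            # lines per set (2 way set associative)
DOUBLE_BYTES = 8    # size of a double precision value


def set_index(byte_addr):
    """Set selected by a byte address: memory line number modulo NUM_SETS."""
    return (byte_addr // LINE_BYTES) % NUM_SETS


def count_misses(byte_addrs, passes=2):
    """Replay an address trace `passes` times through the cache model.

    Assumption: each set evicts its least recently used line (LRU).
    """
    sets = [OrderedDict() for _ in range(NUM_SETS)]
    misses = 0
    for _ in range(passes):
        for addr in byte_addrs:
            line = addr // LINE_BYTES
            lines_in_set = sets[line % NUM_SETS]
            if line in lines_in_set:
                lines_in_set.move_to_end(line)          # hit: most recently used
            else:
                misses += 1
                if len(lines_in_set) == WAYS:
                    lines_in_set.popitem(last=False)    # evict the LRU line
                lines_in_set[line] = True
    return misses


# l1, l2, l3 spaced 1024 doubles apart (assumed to start at address 0).
stride = 1024 * DOUBLE_BYTES
strided = [k * stride for k in range(3)]

# The same three lines after a copy into contiguous memory.
contiguous = [k * LINE_BYTES for k in range(3)]

print([set_index(a) for a in strided])     # all three lines select the same set
print([set_index(a) for a in contiguous])  # three different sets
print(count_misses(strided), count_misses(contiguous))
```

Under these assumptions the three strided lines all select the same set, so every access of the second pass misses again, while the copied block only pays the three compulsory misses of the first pass.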


Figure: Mutual exclusion in block access.


Thomas Guignon

2000-08-24
