A Framework for Fine

grained Data Integration and Curation,

with Provenance, in a Dataspace

David W. Archer, Lois M. L. Delcambre, David Maier

Department of Computer Science, Portland State University

Portland, OR 97207 USA

{darcher, lmd, maier @cs.pdx.e

Abstract

Some tasks in a dataspace (a loose collection of heterogeneous data sources) require integration of fine

grained data

from diverse sources. This work is often done by end users knowledgeable about the domain, who copy

paste

preadsheet or other existing application. Inspired by this kind of work, in this paper we define a

ration setting characterized by data that are explicitly selected, copied, and then pasted into a target dataset where

they can be confirmed or replaced. Rows and columns in the target may also be combined, for example, when r

dant. Each of these actions is an integration decision, often of high quality, that when taken together comprise the

provenance of a data value in the ta

get. In this paper, we define a conceptual model for data and provenance for

these user actions, and we show how questions about data provenance can be answered. We note that our model

can be used in automated data curation as well as in a setting with the manual a

tivity we emphasize in our examples.

Introduction

When a user is confronted with a potentially large

number of diverse data sources of variable maturity or

quality, i.e., a data

pace [1], she may have limited tools

available to integrate or query data from these sources.

A user typically uses a weak tool such as a sprea

sheet to gather data and almost always uses a copy

paste action to transfer data from sources into an

integrated target dataset. This kind of work differs su

ation integration, i

date exchange, as discussed in the literature

[2,3,4,5], though aspects of it have been a

dressed in

literature concerning curated dat

bases [10,11,18,19].

Perhaps the most compelling diffe

ences between this

traditional integration are that the

user works with individual data values, rather than sets

of rows at a time, and that provenance is needed at the

level of these individual values. These differences and

others we di

cuss in Section 2 lead us to describe and

address a data curation se

As an example, consider Anne, a battalion inform

tion officer working in a theatre of military ope

ation.

Anne gathers and organizes information from a v

of sources to help her commanders make d

cisions. A

semble a table of

casualty information due to e

plosive device incidents

sources for asse

bling this report may include incident

databases from friendly forces in the area, e

changes with local police, pa

rol logs for the week, and

medical

team records. Anne uses her personal know

edge of the operations area, recent events, reliability of

sources, and her commander’s prefe

ences for data as

she works. She may begin gathering data by writing a

query against an existing database, as in traditional

information integration. However, she then proceeds

traditional lines: adding rows to her table as

she identifies incidents of interest; s

lecting data va

rnal sources and inserting them into rows

and columns in the evolving data table by using copy

needed; manually overriding data values directly or

with data from other sources; merging rows that she

resent the same incident into new rows (e

tity resol

tion), and choosing correct values for each

column in the resul

ing row from those being merged;

combining information from multiple co

umns that she

realizes are redundant (attribute resolution), a

ing correct values for each row in the resu

ting column

from those being merged; confirming data elements she

knows to be accurate; correcting mistakes; and issuing

further updates.

Each of Anne’s actions is an information integration

each data value in her report is the pro

uct of such decisions. This provenance is often lost

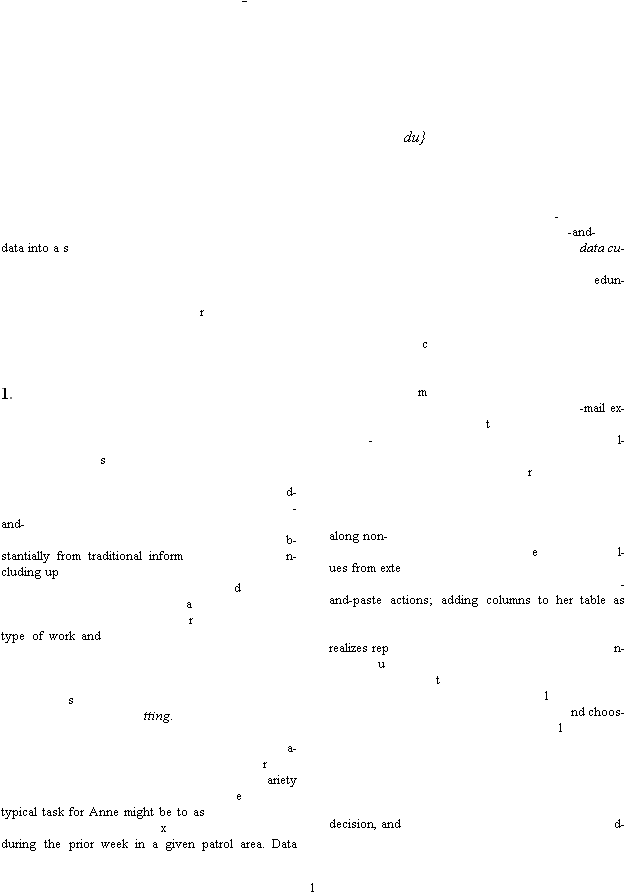

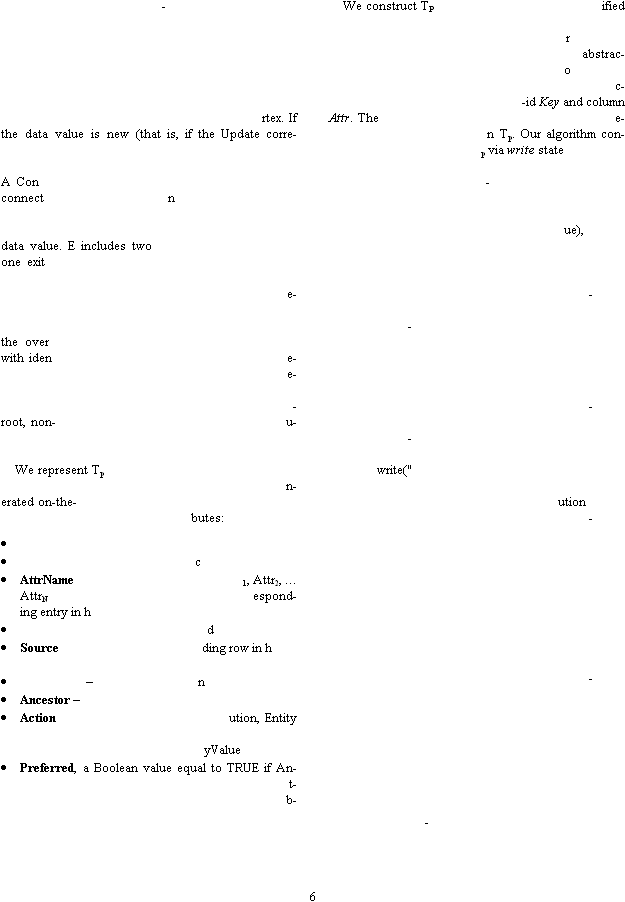

![2 such as, “Where did we get the date for in105?”and i-buoe-tion set-dea-plutting. ation, -plus-autotting. othe ernized obe a conr-ma-c--and-unspr-concludes. 2. tting a-(and in the prototype implementation of our data t-ing a datab-utes); copying data values from external information dper-have not included here the notable, as Buneman et al. do [10,11]. Suppose Anne fol IncidID Mapl1 Mapl2 T Dev 101 34.3998 70.4986 ied 102 2 art 103 34.3998 70.4985 1 Here, IncidID is Anne’s identifier for incidents, n-specified attribute, and Dev is the type of munition -story ng copy-and-on casualcolfully un n, on an attrib-ute-by-nn-ventity. She then resolves incidents 102 and 104. h ID Mapl1 Mapl2 T Dev Cas ME MLat MLng Date 101 34.3998 70.4986 1 ied 3 3 3423.99 7029.92 03mar 102 34.3996 70.4985 2 art 7 6 3423.96 7029.90 05mar 103 34.3998 70.4985 1 ied 4 3 3423.99 7029.91 03mar 104 34.3996 70.4985 2 msl 7 6 3423.96 7029.90 04mar 105 34.3994 70.4988 1 ied 12 12 3423.94 7029.88 06mar -and-](./background2.png)

with current tools, but is key to answering questions

cident

We seek to enable this kind of data integration

provenance exploration with minimal interference

with a user’s workflow. We make the following contr

tions toward our goal in this paper. We elab

rate the

example above in order to describe the differences b

tween this and traditional information integra

tings. We extend the set of user integration actions

fined in our prior work [6,7] to include user confirm

tion, so that a user can express confidence in a data

value, even if the value is not changing. We show how

to derive from a record of user actions the provenance,

rality of support, and trust of each data value in the

assembled data set, and how to use this information to

answer questions that arise in our se

Although our work was inspired by manual cur

it is applicable to an automated or mixed manual

matic setting. A user can copy data in bulk from a

query answer or view into the data curation se

Whether manual or aut

mated, we only require that the

“paste” part of the action specify precisely which cell is

target for each value. In this paper, we d

scribe the

actions in a data curation setting as if a user pe

formed

them manually. The remainder of our paper is orga

as follows. In Section 2, we elab

rate our example of a

data curation setting and descri

ceptual model

to support this setting, including the new user confi

mation action. In Section 3, we describe an i

plement

tion approach to support our conceptual model. In Se

tion 4, we relate copy

paste, entity resolution, and

attribute resol

tion operations to data prove

ance. In

Section 5, we show how our model is able to answer

interesting que

tions about provenance. In Section 6,

we evaluate our a

proach with regard to storage ove

head. Section 7 discusses related work and Section 8

Use Model for a Data Curation Se

We assume that Anne uses a tool for data integr

tion that records each of her actions. In our example

model), Anne’s actions may include: initially popula

table via a query over an external source;

adding or deleting rows (entities) and columns (attri

sources and pasting them into rows and columns of the

table (either updating or ad

ing information); and

forming entity and attribute resolution operations. We

tion of copying a value

from within a data table and pasting it elsewhere in the

writes a query to gather some initial data, with result as

lows:

Mapl1 and Mapl2 are map coordinates, T is some u

involved. Implicit in this table (and not visible to the

user), is a unique, system

generated key for each row in

the table, used by the system for recording the hi

of data manipulation in the row. Next, Anne gathers

more data usi

paste, and finds information

ties and dates for the incidents (adding new

columns Cas and Date), along with other data (new

umns ME, MLat, and MLng), which she does not

derstand yet. Her data table now is shown in

Table 1.

At this point, Anne determines that incidents 101

and 103 are the same, and that i

cidents 102 and 104 are

the same. Selecting “entity resolution” from a menu,

she creates a new entity from incidents 101 and 103,

and as part of the operation she selects

attribute basis, which values to propagate from

the original i

cidents into the new (merged) row whe

ever the source rows disagree. The original rows are

then hidden from Anne’s view, lea

ing only the new

Choices made during the resolutions are hig

lighted in

Table 2.

Table 1. Intermediate data table after first round of copy

paste integration.

Table 2. Data table after initial entity resolution.

Next, Anne finds that columns Mapl1 and Mlat are

the same geographical location data, though in diffe

ent formats. Similarly, Mapl2 and MLng are redundant.

(which she discovers to mean the count of soldiers

MedEvac’d from the scene) also represent the same

information, which she chooses to call Injured. Resol

ing these columns proceeds in fashion similar to the

entity resolution operations. Anne chooses new, mea

ingful column names for the new columns, and her t

Review of the data against other information reveals

inconsistencies. She changes the “I

jured” column of

nt 105 to 9 from 12, and the date of incident 105 to

07mar. From personal kno

ledge, she confirms the “i

jured” column of incident 104 as 6. At last, her r

port is

in final form:

From the point at which Anne began gathering data

to the point at which the report is in final form, 95 r

cordable history actions occurred. At this point, Anne

might need to examine the history behind selected data.

She might for example ask the following que

tions:

“Which incidents were co

bined from others in the

dents in the table had casualty data

that was i

consistent and was corrected?”

“For each incident merged from redundant inc

dents, what were the incident IDs of the original

rows that we chose to resolve?”

“How many i

formation sources have we found to

support the casualty count for the IED incident in

“What other names did co

umn Long in this table

ve in earlier versions of the data gathered?”

“What information about incident 109, if any, was

derived differently than the rest?”

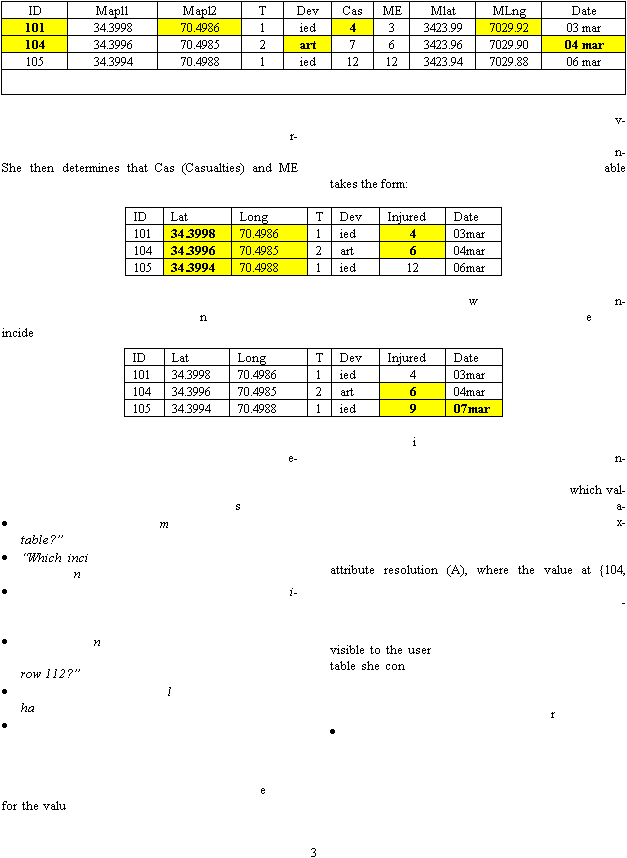

To answer these, Anne can click on a data value in her

table and be presented with a graph of the prov

nance

e, as shown in Figure 1. This graph shows

not only the or

gins (external sources) for the selected

data value, but also the intermediate values that co

tributed to its current state. All user actions that led to

the current state are also shown, including

ues were preferred over others during resolution oper

tions, as well as confirmations made by users. For e

ample, the figure shows that the value for incident 104

in the Injured column was most recently derived by

wounded} in the table was preferred by the user over

the value at {104, cas} (the dashed edge indicates non

preferred). The figure also shows that the current value

was confirmed by user input (X). The conceptual model

in our setting consists of the data

structed, along with a provenance graph

for each “cell” in the table.

This setting has a number of novel cha

acteristics:

Access to the collection of data sources found in a

dataspace may be varied. For example, sources may

come and go over time. In addition, we may wish to

produce a snapshot in time of a dataspace that is

dynamic. Because of this requirement, queries are

answered using only the target i

stance.

sources. However, trad

tional integration may also

play a role.

A data value (once selected) is inserted into a sp

cific location in the target instance, identified by an

mn name (disti

guished by means of the user pointing to a table

cell). The user is implicitly joi

ing each new value

with a row that already exists and specifying a pa

ticular existing column into which the data value

goes.

Provenance tree for (104,“Injured”) where the

current value is 6. Edges represent user actions (A for

attribute resolution, E for entity resolution,

firmation,

for update). Nodes show state of va

in the data table. Non

preferred edges are shown u

ing a dashed

The target instance may include new data i

serted

from the user’s knowledge and not from an ident

fied data source.

The user can confirm that a data value in the ta

is valid, either by pasting in the same value from a

second source, or by locally confirming the value.

A data value gathered by a user may arise from mu

judgements, have multiple attribute name design

tions, and have multiple entity associations during

want to review the reliability of her data, understand

how much support there is for a given data value,

and possibly review the set of values that a given

data value has had over time.

An Implementation Approach

user application

called CHIME (Capturing Human Intension Metadata

with Entities) that supports the kind of integration task

outlined above. CHIME supports creation and manip

lation of a single, entity

centric data table, while ca

ipulation operations (inse

tions

and updates, entity resolutions, attribute resol

tions,

and confirmations) that comprise the pro

enance of

data in the table. Rows in this table correspond to e

tity instances, while columns corre

pond to attributes

entities. (In this paper, we use the term “e

to refer to an entity instance.) Each entity in a CHIME

dataset (i.e., the data shown in one row) has an inte

nally generated, unique identifier, called Ke

Val in the

schema, which is not made visible to the user.

CHIME data can be modelled by two relations. We

define schema R with instance r to represent ent

generated identifier that

functions as a cand

date key for R

Col.Visibl

}, where each Attr is a user

tribute name and each Col.Visible a Boolean that

specifies whether Attr is visible to the user and el

gible for CHIME operations.

whether this tuple in r is visible to the user and el

gible for CHIME operations.

We define a history table schema H, with instance h.

Each user action on the data relation r adds new entries

to h. H has a

tributes:

SeqNum, a monotonic integer (e.g., a timestamp)

KeyVal, a foreign key refer

AttrName, an attribute with domain {Attr

AttrVal, the value given to attribute AttrName in the

row in r with key KeyVal as a result of this a

Action, an attribute with domain {A, E,

, X} (for

attribute resolution, entity resolution, update, and

user confirmation, respectively)

Preferred, an attribute with domain {Left, Right,

Both} that indicates which parent tuple or column

ferred for item (KeyVal, AttrName) by the

user during a resolution operation

LeftParent,

{dom(AttrName)

dom(KeyVal)

NULL}, ind

ing one parent row (for entity resolution) or co

(for attribute resolution), or NULL otherwise

{dom(AttrName)

dom(KeyVal)

ing the other of two parents resolved to form the

new data value

valued attribute with domain co

sisting of the distinguished value “User

supplied”

and names of data sources

Taken together, Seqnum, KeyVal, and AttrName

form a candidate key for H. Note that multiple entries in

y have the same SeqNum. For example, all entries

associated with a resolution operation have the same

timestamp, though each entry affects a different target

data value.

We envision a user interface (much like our CHIME

prototype interface) where data in r is shown in table

form, with only rows and columns with Visible flags set

to True visible to the user. In the interface, the user can

easily see the corresponding data from h in the form of

a provenance graph for any data value shown, e.g. by

clicking on the data value.

Relating User Actions to Prov

tion from CHIME actions to fields of

entries in the CHIME history table (h) in order to co

enance and answer user questions. Copy

paste actions that insert new (or different) values

into a data value are mapped to entries in h as fo

lows:

SeqNum = (automatically generated) sequence

number of this update in the overall history

KeyVal = internal identifier of target row

AttrName = name of target co

AttrVal = value pasted

on = Update

Preferred = NULL

LeftParent = NULL

RightParent = NULL

Source = source identifier or “User

Users can copy

paste the same value into a data

value again, possibly from a different source. In this

case, we add an entry to the history table, r

cording the

user action as Confirm. The user may also Co

paste operation,

when there is no exte

nal source.

Entity resolution in our model consists of merging

two entities into a new entity, then making the original

entities not visible. For each attribute (column) of the

sulting entity where source data values differ (note

that the schema of source entities and result entity are

the same), the user must choose which source a

tribute

agate. When source attribute values are

ion. Two attributes are

merged into a new attribute, and the two original co

source values differ, the user chooses column values to

propagate to the new co

umn. We map entity resolution

erations to entries in h as

follows:

number of this resolution in the overall history. (B

cause resolutions affect multiple data values, and it

is useful to identify all effects of a resolution, all

such effect are r

corded with one SeqNum)

KeyVal = internal identifier for row of the data val

resulting from entity or a

tribute resolution

AttrName = column name of the data value resul

tity or attribute resolution

AttrVal = value of the data value after resolution

Action = Entity or Attribute resolution

Preferred = Left, Right, or Both referring to one or

both of the Parent values below

LeftParent = one of the KeyVals (if entity resol

tion) or column names (if attribute resolution) i

volved in entity or attribute resolution

RightParent = the other KeyVal or column name

volved in entity or attribute resolution

Source = NULL

Computing and Expressing Provenance

In this section we explore the use of the history rel

tion to generate provenance graphs. We then d

graphs in order to answer user que

tions.

We define a provenance tree

(V,E) for a data value

in the user’s table as a directed acyclic graph with V a

set of vert

ces and E a set of edges. A vertex v

corresponds to

the current state of the data value of interest, if the

vertex is the root of T

a prior state (ancestor) of the data value of interest,

if the vertex is neither the root nor a leaf in the tree

the source of an update or confirmation, if the ve

tex is a leaf in the tree (either the name of an exte

source, or a constant user

supplied value when the

user confirms the value directly)

A provenance graph represents an Update operation

by adding a leaf vertex to represent the source from

which the data was copied (or to represent a constant

supplied directly by the user), and an Update edge from

the source vertex to the affected data value ve

sponds to insertion of a data value for the first time), a

vertex is added to represent the data value in the table.

firm operation is represented similarly, but the

ing edge is labelled Co

firm. If two data values

are merged during an entity or attribute resolution, a

new vertex is added to represent the resulting merged

edges for the operations,

ing each vertex representing a “parent” data

value and both entering the vertex representing the

new (merged) data value. Where the two values r

solved are not equal, each edge indicates whether the

parent vertex held the value preferred by the user, or

ridden (not preferred) value. In a resolution

tical parent data values, both edges are pr

ferred. In the absence of resolution operations, a prov

nance graph for a data value consists only of a root

vertex and any leaf (source) vertices. Intermediate (non

leaf) vertices are only introduced by resol

tions.

(V,E) for one data value as a pair of

relations, Node and Edge, that are temporary, and ge

fly when the provenance graph is created

by user request. Node includes attri

Nodenum, a candidate key for Node

KeyValue, an foreign key referen

ing KeyVal in H

, an attribute with domain {Attr

} from R, equal to AttrName in the corr

AttrVal, = AttrVal in the correspon

ing entry in h

, = Source in the correspon

The attributes for Edge are:

Descendant

a foreign key refere

cing Node

a foreign key referencing Node

, with domain {Attribute Resol

Resolution, Update, Confirm} equal to Action in the

entry in h for the Descendant’s Ke

cestor’s value was preferred during the action crea

ing Descendant, and FALSE otherwise. This attri

ute is ignored if Action is not a resolution action

(V,E) for a data value in r (spec

by the user selecting a row and column) by retrieving

from the history table the list of actions pe

formed on

the value and its ancestors. Figure 2 shows an

tion of the Prolog implementation of our alg

rithm. The

get_history predicate retrieves from h a list of user a

tions performed on the value at row

setpref predicate sets the value of the “Pr

ferred” indicator for edges i

structs a textual version of T

ments.

make_ptree(Key, Attr, Root) :

get_history(Key, Attr, [H|Rest]),

history(H,_,_,Val,_,_,_,_,Src),

build_ptree(Key, Attr, H, [H|Rest], tr

write("node(",H,Key,Attr,Val,Src,")."),

Root is H.

% most recent action was an update

build_ptree(Key, Attr, Root, [H|Rest], Pref_flag) :

history(H,_,_,Val,u,_,_,_,Src),

S is 0

Root,

write("node(",S,Key,Attr,Val,Src,")."),

write("edge(",Root,S,u,Pref_flag,")."),

build_ptree(Key, Attr, Root, Rest, false).

% most recent action was a confirmation

build_ptree(Key, Attr, Root, [H|Rest], Pref_flag) :

history(H,_,_,Val,c,_,_,_,Src),

S is 0

Root,

write("node(",S,Key,Attr,Val,Src,")."),

edge(",Root,S,u,Pref_flag,")."

build_ptree(Key,Attr,Root,Rest,Pref_flag).

% most recent action was an attribute resol

build_ptree(Key, Attr, Root, [H|Rest], Pref_flag) :

history(H,_,_,_,a,Pref, LeftP, RightP, _),

make_ptree(Key, LeftP, NewRoot1),

setpref(Pref_flag, Pref, left, P),

write("edge(",Root,NewRoot1, a, P,")."),

make_ptree(Key, RightP, NewRoot2),

setpref(Pref_flag, Pref, right, Q),

write("edge(",Root,NewRoot2, a, Q,")."),

build_ptree(Key, Attr, Root, Rest, false),!.

% most recent action was an entity resolution

build_ptree(Key, Attr, Root, [H|Rest], Pref_flag) :

history(H,_,_,_,e,Pref, LeftP, RightP, _),

make_ptree(LeftP, Attr, NewRoot1),

setpref(Pref_flag, Pref, left, P),

write("edge(",Root,NewRoot1,e,P,")."),

make_ptree(RightP, Attr, NewRoot2),

setpref(Pref_flag, Pref, right, Q),

write("edge(",Root,NewRoot2,e,Q,")."),

build_ptree(Key, Attr, Root, Rest, false),!.

% base condition

empty history list

build_ptree(_,_,_,[],_).

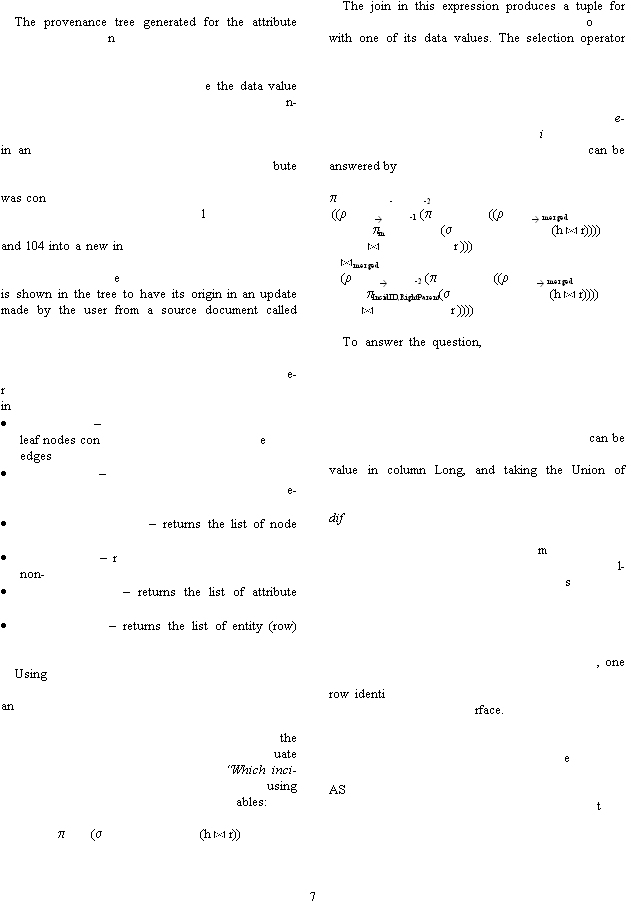

Figure 2. Provenance tree construction algorithm

value “Injured” for i

cident 104 in the final data table of

Section 2 is shown in Figure 1. Nodes are labelled for

clarity in this example with a row key (IncidID in this

case) and a column name to indicat

represented. The tree shows that the data value of i

terest has value 6, which was inherited from ancestor

{104, wounded} and preferred over an alternate value

cestor {104, cas} during attribute resolution of

attributes “cas” and “wounded” into the new attri

named “injured”. The tree also shows that this value

firmed by user input. The value at {104, cas} is

shown to be inherited from earlier va

ues at {102, cas}

and {104, cas} during entity resolution of incidents 102

cident ID that the user chose to

also call 104. In this case, both values were the same,

so both edges are pr

ferred. The value from {102, cas}

“document 3”.

We have defined a set of predicates over the Node

and Edge tables to assist in answering common qu

ies. We omit their Datalog definitions and define them

formally here:

view_origins

returns the list of node numbers of

nected to the root node by pr

ferred

view_sources

returns the names of data sources

from all leaf nodes connected to the root by pr

ferred edges

view_all_predecessors

numbers of all leaf nodes in the tree

view_support

eturns a count of the number of

root nodes with the same data value as the root

view_attribnames

names found in the tree nodes

view_entityIDs

identifiers found in the tree nodes

these predicates, relational algebra, and the

provenance graph for a selected data value, we can

swer the kind of questions described in Section 2. To

answer the question, “How did we find out the date of

incident 105?” we build the provenance graph for

“Date” column of the row for incident 105, and eval

the query view_sources(). We answer,

dents were combined from others in the table?”

relational algebra over the history and data t

IncidID

Row.Visible=True,Action=E

every entity that has a history table entry ass

ciated

chooses those where the history table entry represents

an entity resolution, and where the entity is still visible

to the user.

The question, “For each incident merged from r

dundant incidents, what were the inc

dent IDs of the

two predecessor rows we chose to resolve?”

merged, original

1,original

incidID

original

merged, IncidID

incidID

(

cidID,LeftParent

Row.Visible=True, Action=E

LeftParent = KeyVal

incidID

original

merged, IncidID

incidID

(

Row.Visible=True, Action=E

RightParent = KeyVal

“How many information

sources have we found to support the casualty count

for the IED incident in row 102?” we can apply the

view_origins() predicate to the provenance graph for

the data value “Injured” in the row for incident 102.

“What other names did column Long in this table have

in earlier versions of the data you gathered?”

answered by building a provenance tree for each data

view_attribnames() on each provenance graph. “What

information about incident 109, if any, was derived

ferently than the rest?” can be answered by using

make_ptree() to construct provenance trees for each

data value in the selected row, then co

puting the tree

edit distance [8] for each possible pairing of these va

ues, and looking at the distribution of edit di

tances.

Provenance queries can also be written against the

history table using the recursion capabilities supported

in SQL:1999. As an example, to answer the question,

“Where did we find the date of incident 105?”

can write the following query, implicitly selecting the

fier of the row for incident 105 (called X in

this example) via a user inte

WITH RECURSIVE

Value_Events(KeyVal, AttrName, Action, Pr

ferred,

LeftParent, RightParent, Source)

(SELECT KeyVal, AttrName, Action, Preferred, Lef

Parent, RightParent, Source

FROM History h

WHERE h.KeyVal = X

![8 eqnum) UNION story AND h 6. copy-and-au-mber of updates made to each value, and c the average number of - 1, be-s N + N - 1 podent overhead for storinnclude a ss-n-snkhstor kh [10,11] ade-nse-pctions: copy-and-pau-de diso-o--e-collaborating teams. Orchestra employs a detailed y-rtial n-](./background8.png)

AND h.AttrName = “Date”

AND h.SeqNum =

(SELECT MAX (S

FROM History h

WHERE h.KeyVal = X

AND h.AttrName = “Date”

AND h.Action != “Confirm”))

(SELECT KeyVal, AttrName, Action, Preferred,

LeftParent, RightParent, Source

FROM Hi

h, Value_Events v1

WHERE (h.KeyVal = v1.LeftParent

AND v1.Preferred = Left

AND h.AttrName = v1.AttrName

AND h.Action = E) OR

(h.KeyVal = v1.RightParent

AND v1.Preferred = Right

AND h.AttrName = v1.AttrName

.Action = E) OR

(h.AttrName = v1.LeftParent

AND v1.Preferred = Left

AND h.KeyVal = v1.KeyVal

AND h.Action = A) OR

(h.AttrName = v1.RightParent

AND v1.Preferred = Right

AND h.KeyVal = v1.KeyVal

AND h.Action = A))

SELECT Source

FROM Value_Events v2

WHERE Source != ""

Overhead of Provenance Storage

Provenance overhead grows with the number of user

actions. If a dataset is constructed using individual

paste actions, then the history t

ble grows

by one entry per data value pasted into the data table,

plus entries for each update, confirmation, and resol

tion later affecting that data value. Let N be the nu

of data values pasted by the user, r the average number

confirmations applied to each value. Note

that the number of resolutions cannot exceed N

cause once a pair of values is resolved to form a new

value, the original pair becomes non

visible and cannot

be resolved further. The number of hi

tory table entries

may be as large as

(due to initial population)

+ N * r

(due to revisions)

+ N * c

(due to confirmations)

(due to resolutions)

Then the number of history table entries is less than

(2 + r + c) N

Revisions (r) and confirmations (c) per data value are

tentially unlimited, but in practice, we expect that

each data value will only be revised or confirmed a

small number of times, and that number is indepen

of the total number of data values in the table. The

g provenance also must i

factor for the size of each hi

tory entry relative to the

average size of each data value. The size of a history

entry is constant in our implementation, and if we a

sume that the average size of a data value is also a co

tant, we can express the ratio of these as a co

stant

. This lets us express a bound on the overhead for

ing provenance as

* ((2 + r + c) N), or O(N)

7. Related Work

Buneman, Chapman, Cheney, and Vansummeren

dress provenance for manually curated data

in scientific disciplines. Their work shares many of the

same goals as ours. They address two forms of prov

nance (“where” and “why” [17]), but do not seem to

address the ability to reco

struct the history of actions

affecting data values (“what”). However, if hi

torical

data values and actions were included in their prov

nance tables, the expressive power of our a

proach and

theirs, with regard to updates, would be the same. Their

work addresses a limited set of user a

ste and insertion (of which we call updates), and

deletion. Our user action model includes entity resol

tion, attribute resolution (schema modification), and

confirmation, though it does not at present include

letions.

Substantial work has been reported on computing

provenance of data exchanged between structured,

tributed scientific data stores. Orchestra [4], a prot

type Collaborative Data Sharing System (CDSS), pr

vides a general

purpose platform for data sharing b

tween structured data sources maintained by distinct

provenance model based on pol

nomials in semi

rings

[12], where each term in a polynomial describes a pa

mapping from source relations to target relations. The

polynomial approach allows Orchestra to track several

ancestors for one tuple, much like CHIME does for i

dividual data values. As a result, Orchestra and CHIME

![9 ey-nomial. Or-chestra computes provenance on a per--Second, Orchestra focuses on only mappings ex-mappings expressed at the individual data-mputed when ex-st-ting, we conc-te-rnrtuple that originated from anothem -e-age function atime an operation is performed. Both CHIME and -e-age compue-con a data value is by definition cycle-atable, which also enables other functionality, while ULDBs appear to stdiffers in that CHIME retains provenance on a per- AutoMed [14] defines the notion of schema-a-tion and integration in a relational (data wenvination e- We have defined a new data-mananclud-tameans, our data curation setting permits the prove-c-x-at-a-time CHIME, supporting copy-and-paste, drag-resolution, and search- intero-lution, attribute resolution, and de-creProlog implementation for creation and querying of prove has exactly on-c-i the high--y necessi-tates relaxing integration throughput. Other ap-secution of a set of pre-mp Acknow - n-ty-Fifth ACM SIGMOD-SIGACT-SIGART Sympo-](./background9.png)

support trust evaluation based on whether a user trusts

the various sources described in the prov

nance pol

rchestra also differs from our work. First, O

tuple basis,

while we compute provenance on a per

value basis.

pressed at the schema level, while our work focuses on

value level.

In Orchestra, provenance data is co

plicit “update exchange” events are i

sued. In our se

tinuously record the history of user a

tions, and at any time allow the user to construct from

his history a provenance tree to support useful qu

ries. We also have a richer model for user confi

mation,

where the user can explicitly co

firm a value; Orchestra

has a simpler negative confi

mation at the tuple level: a

r site, once deleted

locally, will not be i

ported again.

Uncertainty

Lineage Databases (ULDBs) [13] store

the lineage of database tuples along with data. A lin

is defined for each rel

tional operator

available in the database, and lineage for each tuple is

computed using the appropriate lineage functions each

ULDBs rely on well

behaved lineage: For example, lin

tation must be free of cycles. CHIME pr

vents cycles in lineage because the set of user a

tions

free. Our work

differs from the ULDB approach in that the source data

for our provenance calcul

tions are stored as a history

ore only lineage. Our work also

value basis rather than per tuple.

transformation pathways that express data transform

arehouse)

ronment. In AutoMed, transformation pathways

are evolved dynamically to record the evolution of the

warehouse schema. Li

eage tracing of tuples may be

derived from this pathway data. Our work is similar, but

at the granularity of data values rather than tuples. We

retain a history table that resembles the transform

pathways of AutoMed, and use it to compute prov

nance of individual data values, much as AutoMed

does for tuples.

8. Conclusions and Future Work

gement setting

distinct from traditional information integration (i

ing update exchange). We have shown how the history

of user actions in our se

ting defines the Where, What,

and Why provenance of the integrated dat

sets users

create in that setting. Whether by automated or manual

nance of sets of data values, including data from query

answers or views, to be captured as long as the colle

tive gathering work can be e

pressed in a set of value

actions.

Using this model, we have shown how to answer

user questions about data provenance. To test our

ideas, we have implemented a prototype version of

drop, entity

browse with a Windows user

face; a Prolog implementation supporting all user

actions defined here (update, confirmation, entity res

resolution), that

ates the user data table and history table; and a

nance trees from history tables, as described in

this paper.

At present, the CHIME data model is limited to first

normal form. That is, though an attribute value may

have a history of several values, at any point in time it

ne value. As Halevy, Franklin, and Maier

point out, a realistic dataspace model must represent

inconsistent and u

certain attribute values [2]. We are

currently extending this work to cover representation

and manipulation of such non

first normal form stru

tures, incorporating some of the ideas of Jaeschke and

Schek [15] and Arisawa, Moriya, and M

ura [16].

CHIME provides integration that takes advantage of

quality decisions derivable from direct user

actions. This trade

off in favor of high qualit

proaches, such as Orche

tra [4], favor enabling high

throughput by automated ex

constructed TGDs against inco

ing data sets. We plan

to explore blending these a

proaches.

ledgments

This work was supported in part by NSF grant IIS

0534762 and by DARPA.

References

[1] Halevy, A., Franklin, M., and Maier, D. “Principles

of dataspace systems,” In Proceedings of the Twe

![10 m nnen, V. “Update exchange with mappings and prove-nance,” In col-n- (SIG “UpIn Proceedingr-En ery-day, Ima-tion Technology liza-n-ation,” In 2008 problems” Pro-ceedings of the Seventeenth ACM SIGACT-SIGMOD-a-tab n-u-rated data,” In kshop2006. ve-Pro-a ve-nance semirings,” In Proceedings of the Twenty-Sixth ACM SIGMOD-SIGACT- (PODS '07). o-r- 17 [14] s-Lec- Proceedi-n- a-First--Form Rernational VLDB En ianu, -Verlag, Lon [18] Buneman, P., Cheney, J., Tan, W. C., Vansum-the 27th ACM SIGACT-Principles of Database Systems2008. Proceedings r-i](./background10.png)

sium on Principles of Database Systems (PODS '06).

ACM, 2006.

[2] Fagin, R., Kolaitis, P. G., Miller, R. J., and Popa, L.

“Data exchange: semantics and query answering,”

Theoretical Co

puter Science 336, 2005.

[3] Green, T. J., Karvounarakis, G., Ives, Z. G., Ta

Proceedings of the 33rd international

Conference on Very Large Data Bases (VLDB ’07).

VLDB Endowment, 2007.

[4] Green, T., Karvounarakis, G., Taylor, N., Biton, O.,

Ives, Z., Tannen, V. “ORCHESTRA: facilitating

laborative data sharing,” In Proceedings of the 2007

ACM SIGMOD international Conference on Ma

agement of Data

MOD '07). ACM, 2007.

[5] Green, T., Karvounarakis, G., Ives, Z., Tannen, V.

date exchange with mappings and provenance,”

s of the 33rd international Confe

ence on Very Large Data Bases (VLDB’07). VLDB

dowment, 2007.

[6] Archer, D., Delcambre, L. “Capturing Users’ Ev

plicit Information Integration Decisions,”

Conferences in Research and Practice in Inform

vol. 83. Auckland, NZ, 2007.

[7] Archer, D., Delcambre, L. “Definition and Forma

tion of Entity Resolution Functions for Everyday I

formation Integr

Proceedings of SDKB

, Nantes, France, 2008.

[8] Bille, P. “A survey on tree edit distance and related

Theoretical Computer Science 337, 2005.

[9] Abiteboul, S. and Duschka, O. M. “Complexity of

answering queries using materialized views,” In

SIGART Symposium on Principles of D

ase Systems (PODS '98). ACM, 1998.

[10] P. Buneman, A. Chapman, J. Cheney, and S. Va

summeren. “A provenance model for manually c

Proceedings of the International

Provenance and Annotation Wor

(IPAW’06),

[11] Buneman, P., Chapman, A., and Cheney, J. “Pro

nance management in curated databases,” In

ceedings of the 2006 ACM SIGMOD intern

tional

Conference on Management of Data (SIGMOD '06).

ACM, 2006.

[12] Green, T., Karvounarakis, G., Tannen, V. “Pro

SIGART Symposium

on Principles of Database Systems

ACM, 2007.

[13] Benjelloun, O., Das Sarma, A., Halevy, A., The

bald, M., Widom, J. 2008. “Databases with unce

tainty and lineage,” VLDB Journal

, 2, 2008.

Fan, Hao, Poulovassilis, A. “Using schema tran

formation pathways for data lineage tracing,”

ture Notes in Computer Science 3567, 2005.

[15] Jaeschke, G., Schek, H. “Remarks on the Algebra of

Non First Normal Form Relations,” In

ngs of

the 1st ACM SIGACT

SIGMOD Symposium on Pri

ciples of Database Systems (PODS '82), ACM, 1982.

[16] Arisawa, H., Moriya, K., and Miura, T. “Oper

tions

and Properties on Non

Normal

lational

Databases,” In Proceedings of the 9th inte

Conference on Very Large Data Bases (VLDB ’83)

dowment, 1983.

[17] Buneman, P., Khanna, S., Tan, W. C. “Why and

Where: A Characterization of Data Provenance,” In

Proceedings of the 8th International Conference on

Database Theory (2001). J. V. Bussche and V. V

Eds. Lecture Notes In Computer Science, vol. 1973.

Springer

don, 2001.

meren, S. “Curated Databases,” In Proceedings of

SIGMOD Symposium on

(PODS'08), ACM,

[19] Buneman, P. “Curated Databases,” In

of the 4th International Conference on Web Info

mation Systems Eng

neering (WISE’03), 2003.