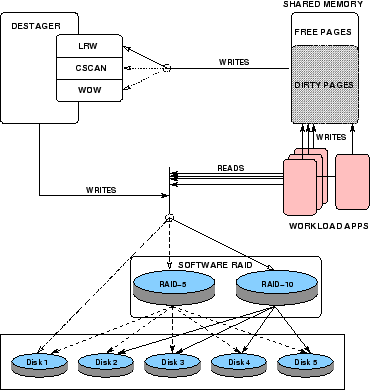

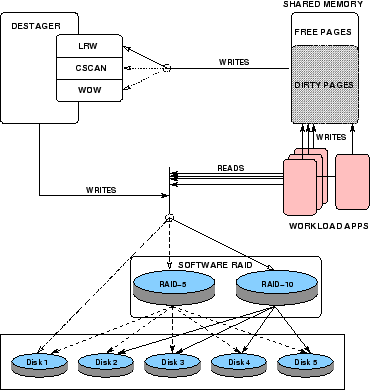

A schematic description of the experimental system is given in Figure 5.

The workload applications may have multiple threads that can asynchronously issue multiple simultaneous read and write requests. We implemented the write cache in user space for ease of debugging. As a result, we have to modify the workload applications to replace the `read()` and `write()` system calls with our own versions of these calls, which are provided by our shared memory management library once it is linked in. This is a very simple change that we have implemented for all the workload applications used in this paper.

For a single disk, NVS was managed in units of 4KB pages; for RAID-5, in units of 256KB stripe write groups; and for RAID-10, in units of 64KB strip write groups. Any arbitrary subset of the pages in a write group may be present in NVS. Write requests that access consecutively numbered write groups are termed sequential.
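The page and write-group bookkeeping described above can be sketched as follows. This is a minimal illustration, not the experimental code: the names `GROUP_BYTES`, `groups_of_request`, `is_sequential`, and `record_write` are hypothetical, NVS is modeled as a dictionary from write-group number to the set of 4KB pages of that group currently held, and "sequential" is interpreted as a request that begins in the write group immediately following the last group touched by the previous request.

```python
KB = 1024
PAGE_BYTES = 4 * KB  # NVS page size

# Write-group sizes quoted in the text, in bytes (hypothetical table name).
GROUP_BYTES = {"single_disk": 4 * KB, "raid5": 256 * KB, "raid10": 64 * KB}


def groups_of_request(offset: int, length: int, group_bytes: int) -> range:
    """Write-group numbers covered by the byte range [offset, offset + length)."""
    if length <= 0:
        return range(0)
    return range(offset // group_bytes, (offset + length - 1) // group_bytes + 1)


def is_sequential(prev: range, curr: range) -> bool:
    """Assumed reading: curr starts in the group right after prev's last group."""
    # range.stop is one past the last group, i.e. the next consecutive group number.
    return len(prev) > 0 and len(curr) > 0 and curr.start == prev.stop


def record_write(nvs: dict, offset: int, length: int, group_bytes: int) -> None:
    """Mark the 4KB pages of a write as present, keyed by write group.

    Each entry holds whichever pages of that group are in NVS, which may be
    an arbitrary subset of the group's pages.
    """
    if length <= 0:
        return
    first_page = offset // PAGE_BYTES
    last_page = (offset + length - 1) // PAGE_BYTES
    pages_per_group = group_bytes // PAGE_BYTES  # 1, 64, or 16 pages
    for page in range(first_page, last_page + 1):
        nvs.setdefault(page // pages_per_group, set()).add(page)
```

For example, with RAID-5 groups a 256KB write at offset 0 covers group 0, and a following write at offset 256KB covers group 1, so the second request counts as sequential under this interpretation.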

On a read request, if all requested pages are found in NVS, it is deemed a read hit and is served immediately (synchronously). If, however, not all requested pages are found in NVS, it is deemed a read miss, and a synchronous stage (fetch) request is issued to the disk. The request must then wait until the disk completes the read; immediately upon completion, the read request is served. As explained above, read misses are not cached.
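The read path above can be sketched as a single function, assuming NVS is a dictionary from page number to page contents and `stage` is a hypothetical callable that performs the synchronous disk fetch. One detail is our own assumption rather than something stated in the text: on a miss, pages already in NVS are dirty and newer than the disk copy, so they override the staged data. Consistent with the text, a read miss does not insert anything into NVS.

```python
from typing import Callable, Dict, List, Tuple

Pages = Dict[int, bytes]  # page number -> page contents


def handle_read(nvs: Pages, pages: List[int],
                stage: Callable[[List[int]], Pages]) -> Tuple[str, Pages]:
    """Serve a read of `pages`, returning ("hit" or "miss", contents)."""
    if all(p in nvs for p in pages):
        # Read hit: every requested page is in NVS; serve it immediately.
        return "hit", {p: nvs[p] for p in pages}
    # Read miss: issue a synchronous stage request and block until the disk returns.
    data = stage(pages)
    # Assumption: dirty pages held in NVS are newer than the disk copy.
    data.update({p: nvs[p] for p in pages if p in nvs})
    # Read misses are not cached: nvs is deliberately left unchanged.
    return "miss", data
```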

On a write request, if all written pages are found in NVS, it is deemed a write hit, and the write request is served immediately. If some of the written pages are not in NVS but enough free pages are available, the write request is again served immediately. If, however, some of the written pages are not in NVS and not enough free pages are available, the write request must wait until enough pages become available. In the first two cases, the write response time is negligible, whereas in the last case it can become significant. Thus, NVS should be drained so as to avoid this situation whenever possible.
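The three write cases can be sketched with a toy, page-granular NVS that only tracks which pages are present. The class name `NVS` and its methods are hypothetical, page contents are omitted, and the "wait" outcome simply reports that the caller would have to block until the destager frees enough pages; no actual blocking is modeled.

```python
class NVS:
    """Toy page-granular NVS that tracks which pages are present (contents omitted)."""

    def __init__(self, capacity_pages: int):
        self.capacity = capacity_pages
        self.dirty = set()

    @property
    def free_pages(self) -> int:
        return self.capacity - len(self.dirty)

    def write(self, pages) -> str:
        """Classify a write of the given page numbers and absorb it if possible."""
        missing = set(pages) - self.dirty
        if not missing:
            return "hit"        # all pages already in NVS: overwritten in place
        if len(missing) <= self.free_pages:
            self.dirty |= missing
            return "absorbed"   # enough free pages: served immediately
        return "wait"           # caller must block until pages are destaged
```

Only the "wait" outcome incurs a significant response time, which is why the destager aims to keep `free_pages` from reaching zero.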

We have implemented a user-space destager program that chooses dirty pages from the shared memory and destages them to the disks. This program is triggered into action when the number of free pages in the shared memory runs low. It can be configured to destage either to a single physical disk or to the virtual RAID disk. To decide which pages to destage from the shared memory, this program can choose among three cache management algorithms: LRW, CSCAN, and WOW.
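The destager's trigger and pluggable victim selection can be sketched as below. This is an illustrative sketch only: the low-watermark threshold, batch size, and the names `destage_batch`, `lrw_order`, and `cscan_order` are hypothetical, dirty state is tracked per write group with a logical timestamp of its most recent write, and only LRW (least recently written first) and CSCAN (ascending order from a head position, wrapping around) are shown. WOW is not sketched here.

```python
from typing import Dict, List


def lrw_order(dirty: Dict[int, int], head: int) -> List[int]:
    """LRW: least recently written group first (head is unused, kept for a uniform interface)."""
    return sorted(dirty, key=lambda g: dirty[g])


def cscan_order(dirty: Dict[int, int], head: int) -> List[int]:
    """CSCAN: ascending group order starting at head, wrapping to the lowest group."""
    ahead = sorted(g for g in dirty if g >= head)
    behind = sorted(g for g in dirty if g < head)
    return ahead + behind


POLICIES = {"LRW": lrw_order, "CSCAN": cscan_order}


def destage_batch(dirty: Dict[int, int], free_pages: int, low_watermark: int,
                  batch: int, policy: str, head: int = 0) -> List[int]:
    """Pick up to `batch` write groups to destage when free space runs low.

    dirty maps write-group number -> logical time of its most recent write.
    Chosen groups are removed from `dirty`, standing in for a completed destage.
    """
    if free_pages >= low_watermark:
        return []  # enough free pages: the destager stays idle
    victims = POLICIES[policy](dirty, head)[:batch]
    for g in victims:
        del dirty[g]
    return victims
```

For instance, with dirty groups `{5: 10, 2: 30, 9: 20, 7: 5}`, LRW picks groups 7 and 5 first (oldest writes), while CSCAN starting at head 6 picks 7, 9, and then wraps to 2.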