Matt Miller

Leviathan Security Group

One of the most critical steps of any security review involves identifying the trust boundaries that an application is exposed to. While methodologies such as threat modeling can be used to help obtain this understanding from an application's design, it can be difficult to accurately map this understanding to an application's implementation. This difficulty suggests that there is a need for techniques that can be used to gain a better understanding of the trust boundaries that exist within an application's implementation.

To help address this problem, this paper describes a technique that can be used to model the trust boundaries that are created by securable objects on Windows. Dynamic instrumentation is used to generate object trace logs which describe the contexts in which securable objects are defined, used, and have their security descriptor updated. This information is used to identify the data flows that are permitted by the access rights granted to securable objects. It is then shown how these data flows can be analyzed to gain an understanding of the trust boundaries, threats, and potential elevation paths that exist within a given system.

One of the most critical aspects of any application security review is the process of modeling an application's trust boundaries. Knowledge of these boundaries allows an auditor to understand how domains of trust are able to influence one another. Without it, an auditor is generally not able to easily identify the components of an application that may be exposed to untrusted data. As such, an application's trust boundaries must be understood in order to accurately characterize the threats that exist.

A common methodology that can aid this process is threat modeling, which has been popularized by Microsoft in recent years as a component of the Security Development Lifecycle (SDL)[10]. Threat modeling provides an auditor with a framework for describing and reasoning about the trust boundaries that exist within an application's design. While threat modeling can help provide an understanding of an application's as-designed security, it is not as adept at providing an understanding of an application's as-implemented security. For instance, an auditor may find it difficult to use threat modeling to express the trust boundaries that are created by artifacts of an implementation. These deficiencies point to a need for techniques that can be used to improve an auditor's understanding of the trust boundaries that exist within an application's implementation.

A good example of an implementation artifact that can be difficult to capture from an application's design is the way in which an application interacts with securable objects such as files, events, and processes. Securable objects, shortened to objects henceforth, are used by Windows to provide an abstraction for various resources[12]. Each object is an instance of an object type and can be assigned a security descriptor. A security descriptor is used by Windows to describe the access rights that are granted or denied to security identifiers (SIDs) for a given object. These rights can be mapped to the influences each SID may have on other SIDs when using a given object. For example, the ability for all users to write to a registry key whose values are read by an administrative user makes it possible for all users to pass data to, and thus influence, an administrative user. This paper provides an approach that can be used to model and reason about these influences in terms of the data flows permitted by securable object access rights.

The approach described in this paper is composed of two parts. The first part (§2) involves using dynamic instrumentation to generate object trace logs which provide the raw data needed to analyze the data flows permitted by securable object access rights. The second part (§3) involves interpreting the object trace log data to analyze data flows, trust boundaries, threats, and potential elevation paths.

The primary motivation for this paper is to provide tools and techniques needed to allow a security auditor to model and reason about the trust boundaries created by securable objects. While there are many other types of trust boundaries that can exist within an application, such as those related to network connectivity and system calls, this paper focuses strictly on securable objects. In this vein, the specific contributions in this paper include:

There has been significant work done on developing software verification techniques that focus on determining that a program satisfies a given specification[4,2,15,8]. These techniques have also been applied to help support software security analysis[6,3,10,1]. The work presented in this paper may aid software security analysis by automatically deriving an understanding of the data flows permitted by securable object access rights as obtained from a program's implementation. For example, this understanding could be used to help provide raw data for determining threat model conformance with Reflexion models[1].

Previous work has also shown that specific instances of privilege escalations can be detected by using a logical model of the Windows access control system to analyze the access rights assigned to persistent file, registry key, and service objects[14,7]. This paper extends this work by using dynamic instrumentation to collect access control information for all securable object types.

The access rights associated with a given object define the extent to which SIDs can influence one another. As such, the first step to understanding these influences involves collecting access right information for all objects. This paper uses a combination of two approaches to obtain this information for both persistent and dynamic objects. The data collected by both approaches is ultimately written to one or more object trace logs which provide the raw input used in §3.

Persistent objects are non-volatile objects that may exist before a system has booted. The most prevalent persistent objects are files, registry keys, and services. The access rights associated with each of these objects can be obtained using functionality provided by the Windows API[12]. Specifically, GetNamedSecurityInfo can be used for files, GetSecurityInfo can be used for registry keys, and QueryServiceObjectSecurity can be used for services. This approach likely mirrors the one used in previous work[7].

Dynamic objects include both volatile and non-volatile objects that are defined while a system is executing. Examples of dynamic objects include sections, events, and processes. The access rights associated with dynamic objects can be obtained by dynamically instrumenting the object manager in the Windows kernel. Dynamic instrumentation makes it possible to collect information about the contexts in which objects are defined, used, and have their security descriptor updated. This information can be used to better distinguish between access rights that can be granted to a SID, such as by a security descriptor, and access rights that are granted to a SID, such as when a SID defines or uses an object.

Every object that is defined on Windows must first be allocated and initialized by ObCreateObject. By instrumenting ObCreateObject, it is possible to log information about the context that defines a given object. This contextual information includes the calling process context, active security tokens, initial security descriptor (if present), and call stack. It is implicitly assumed that the SID responsible for defining an object is granted full rights to the object.

When an application uses an object it must typically create a handle to the object by issuing a call to a routine that is specific to a given object type, such as NtOpenProcess. These routines ultimately make use of functions provided by the object manager, such as ObLookupObjectByName and ObOpenObjectByPointer, to actually acquire a handle to the object. While there are many places that could be instrumented, there are two options that have superior qualities: hooking the OpenProcedure of each object type or using object manager callbacks. This paper will only discuss the use of object manager callbacks.

Object manager callbacks are a new feature in Windows Vista SP1 and Windows Server 2008[11]. This feature provides a new API, ObRegisterCallbacks, which allows device drivers to register a callback that is notified when handles to objects of a given type are created or duplicated. While this API would appear to be the perfect choice, the default implementation only allows callbacks to be registered for process and thread object types (PsProcessType and PsThreadType). Fortunately, this limitation can be overcome by dynamically altering a flag associated with each object type which enables the use of ObRegisterCallbacks. Once registered, each callback is then able to log information about the context that uses a given object such as the calling process context, active security tokens, assigned security descriptor, granted access rights, call stack, and object name information.

The SecurityProcedure function pointer associated with each object type must be instrumented in order to detect alterations to an object's security descriptor. The SecurityProcedure of an object type is called whenever an object's security descriptor is modified during the course of an object's lifetime, such as through a call to SetKernelObjectSecurity. This allows each instrumented security procedure to log information about the security descriptor that is assigned to individual objects during the course of execution.
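A minimal sketch of what a single object trace log record might hold, assuming a JSON-lines log format. The paper does not specify a log format, so `RecordKind`, `TraceRecord`, its field names, and `to_log_line` are all hypothetical. The fields mirror the contextual information listed above for definitions, uses, and security descriptor updates, with the active security tokens reduced to a single owner SID for brevity.

```python
import json
from dataclasses import asdict, dataclass, field
from enum import Enum
from typing import List, Optional, Tuple


class RecordKind(Enum):
    DEFINE = "define"        # emitted by the ObCreateObject instrumentation
    USE = "use"              # emitted by an object manager handle callback
    SD_UPDATE = "sd_update"  # emitted by an instrumented SecurityProcedure


@dataclass
class TraceRecord:
    kind: RecordKind
    object_type: str            # e.g. "ALPC Port", "Key", "Process"
    object_name: str
    process_id: int             # calling process context
    owner_sid: str              # owner SID of the active (client or primary) token
    granted_access: int = 0     # access mask granted to the handle (USE records)
    # (SID, access mask) pairs from the DACL; None when no descriptor was logged.
    dacl: Optional[List[Tuple[str, int]]] = None
    null_dacl: bool = False     # True if the logged descriptor had a null DACL
    call_stack: List[int] = field(default_factory=list)  # return addresses


def to_log_line(record: TraceRecord) -> str:
    """Serialize one record as a single JSON line of an object trace log."""
    data = asdict(record)
    data["kind"] = record.kind.value  # Enum values are not JSON-serializable
    return json.dumps(data, sort_keys=True)
```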

In addition to instrumenting object definitions, uses, and security descriptor updates, it is also helpful to instrument the memory mapping of loadable modules. This can be accomplished by using PsSetLoadImageNotifyRoutine to register a callback that is notified whenever a module is mapped into the address space of a given process. This gives the callback an opportunity to log information about the base address and image size of each mapping as an attribute of a process object. Memory mapping information is needed in order to determine what image file the return address of a given call stack frame is contained within when interpreting call stack information.
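A minimal sketch of resolving a call stack return address to the image that contains it, assuming each process keeps the (base address, image size, path) tuples reported by its image-load notifications. `ModuleMap` is a hypothetical helper; unloads and overlapping mappings are ignored for brevity.

```python
import bisect
from typing import List, Optional, Tuple


class ModuleMap:
    """Per-process map of mapped images, kept sorted by base address."""

    def __init__(self) -> None:
        self._bases: List[int] = []
        self._entries: List[Tuple[int, int, str]] = []  # (base, size, path)

    def add_image(self, base: int, size: int, path: str) -> None:
        # Record one image-load notification, preserving sort order.
        i = bisect.bisect_left(self._bases, base)
        self._bases.insert(i, base)
        self._entries.insert(i, (base, size, path))

    def resolve(self, address: int) -> Optional[str]:
        # Find the last image whose base is <= address, then check its extent.
        i = bisect.bisect_right(self._bases, address) - 1
        if i < 0:
            return None
        base, size, path = self._entries[i]
        return path if address < base + size else None
```

For example, after `add_image(0x10000, 0x1000, "a.dll")`, the address `0x10500` resolves to `"a.dll"` while `0x11000` lies just past the image and resolves to `None`.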

Object trace log data can be used to better understand how SIDs are able to use objects to influence one another. These influences can be modeled by describing the flow of data between SIDs as permitted by the access rights each SID is granted to a given object. To illustrate this, §3.1 shows how a data flow graph (DFG) can be used to describe the permitted data flow behavior of a system. §3.2 then describes how a DFG can be generated by interpreting object trace log data. Finally, §3.3 shows how trust boundaries and threats can be derived from a given DFG.

A data flow graph $G = (V, E)$ relates a data definition context $d \in V$ with a data use context $u \in V$ such that $(d, u) \in E$. Each vertex is defined as a tuple $v = (a, m, b)$ where $a$ is an actor that belongs to a domain of trust, $m$ is a medium through which data is flowing, and $b$ is a verb that describes how data is transferred. Each verb is defined as $b = (n, c, t)$ where $n$ is the name of the verb, $c$ is a set of matching criteria, and $t$ is a set of threats posed to an actor that makes use of the verb. A data flow $(d, u) \in E$ exists whenever $d = (a_d, m_d, b_d)$ and $u = (a_u, m_u, b_u)$ are using complementary verbs, $b_d \bowtie b_u$, to operate on related mediums, $m_d \sim m_u$.
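The definitions above can be transcribed almost directly into code. In this illustrative sketch (not the paper's implementation), `complementary` and `related` are caller-supplied predicates standing in for the complementary-verb and related-medium relations, and the verb names used in the example are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, FrozenSet, Iterable, Set, Tuple


@dataclass(frozen=True)
class Verb:
    name: str                 # n: name of the verb
    criteria: FrozenSet[str]  # c: matching criteria
    threats: FrozenSet[str]   # t: threats posed to an actor that uses the verb


@dataclass(frozen=True)
class Vertex:
    actor: str   # a: actor belonging to a domain of trust
    medium: str  # m: medium through which data flows
    verb: Verb   # b: how the data is transferred


def data_flows(
    defs: Iterable[Vertex],
    uses: Iterable[Vertex],
    complementary: Callable[[Verb, Verb], bool],
    related: Callable[[str, str], bool],
) -> Set[Tuple[Vertex, Vertex]]:
    """Return the edge set E: every (d, u) using complementary verbs on related mediums."""
    use_list = list(uses)  # iterated once per definition
    return {
        (d, u)
        for d in defs
        for u in use_list
        if complementary(d.verb, u.verb) and related(d.medium, u.medium)
    }
```

Usage: with `write` and `read` declared complementary and mediums related by equality, a write vertex on `LsaPort` pairs only with read vertices on `LsaPort`.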

In the context of this paper, each vertex in a DFG takes on a more precise definition. Specifically, ![]() represents a SID or a group of SIDs,

represents a SID or a group of SIDs, ![]() represents an object instance, and

represents an object instance, and ![]() represents a verb that is specific to the object type of

represents a verb that is specific to the object type of ![]() . A verb's matching criteria

. A verb's matching criteria ![]() describes the access rights that must be granted for a SID to operate on an object. Using these definitions, a data flow exists whenever

describes the access rights that must be granted for a SID to operate on an object. Using these definitions, a data flow exists whenever ![]() and

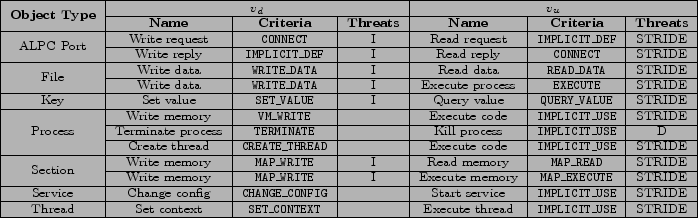

and ![]() are using complementary verbs, such as those found in figure 1, to operate on the same medium

are using complementary verbs, such as those found in figure 1, to operate on the same medium

![]() . For example, a definition

. For example, a definition

![]() and a use

and a use

![]() illustrates a data flow where S-1-1-0 writes a request to LsaPort which is then read by S-1-5-18.

illustrates a data flow where S-1-1-0 writes a request to LsaPort which is then read by S-1-5-18.


A DFG can be generated by interpreting object trace log records that contain information about the access rights individual SIDs are granted to each object. This information exists in log records that describe when an object is defined, used, or has its security descriptor updated.

When an object is defined, a vertex is created for each verb associated with the object's type, with the exception of verbs having the criterion IMPLICIT_USE. This is meant to capture the fact that the definer of an object is implicitly granted full access to the object and is thus capable of using all verbs. Each vertex is created in terms of the context that defined the object, where $a$ is either the Owner of the object's security descriptor or the owner SID of the active security context's client token or primary token, $m$ is the object being defined, and $b$ is the verb whose criteria were satisfied. For example, the dynamic definition of an ALPC port object would lead to the creation of a vertex $(a, m, b)$ for each ALPC port verb, where $a$ is the defining SID and $m$ is the new port object.

When an object is used, a vertex is defined for any verb $b$ whose criteria match the access rights granted to the SID that uses the object. The owner SID and the primary group SID of either the thread's client token or the primary token represent the actors that are involved. For example, acquiring a handle to a registry key with granted rights of KEY_SET_VALUE would lead to the creation of a vertex $(a, m, b)$, where $a$ is one of those actors, $m$ is the registry key, and $b$ is the registry key verb whose criteria KEY_SET_VALUE satisfies.

When an object's security descriptor is assigned or updated, zero or more vertices may be created as a result. Log records that provide information about an object's security descriptor can be interpreted by enumerating the access control entries (ACEs) contained within the discretionary access control list (DACL) of the object's security descriptor. Each ACE contains information about the rights granted to a SID for a given object. A security descriptor with a null DACL can be interpreted as granting full access to all SIDs. If the rights granted to a SID meet the criteria of a given verb $b$, then a vertex can be defined where the actor is the SID that is derived from the corresponding ACE. For example, an ACE that grants the SID S-1-5-18 the KEY_QUERY_VALUE access right would lead to the creation of a vertex $(\text{S-1-5-18}, m, b)$, where $m$ is the registry key and $b$ is the verb whose criteria KEY_QUERY_VALUE satisfies.
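The ACE interpretation described above can be sketched as follows. This is a simplification under stated assumptions: only access-allowed ACEs are considered (no deny ACEs, inheritance, or generic-right mapping), the verb table `REGISTRY_KEY_VERBS` and its verb names are hypothetical, and `ALL_SIDS` is a hypothetical placeholder actor for a null DACL. The two access mask constants are the real winnt.h values.

```python
from typing import Dict, List, Optional, Set, Tuple

KEY_QUERY_VALUE = 0x0001  # winnt.h
KEY_SET_VALUE = 0x0002    # winnt.h

# Hypothetical verb table for registry keys: verb name -> access mask it requires.
REGISTRY_KEY_VERBS: Dict[str, int] = {
    "read_value": KEY_QUERY_VALUE,
    "write_value": KEY_SET_VALUE,
}

ALL_SIDS = "*"  # hypothetical actor standing for "every SID"

Vertex = Tuple[str, str, str]  # (actor, medium, verb name)


def vertices_from_dacl(
    medium: str,
    dacl: Optional[List[Tuple[str, int]]],
    verbs: Dict[str, int],
) -> Set[Vertex]:
    """Derive vertices from access-allowed ACEs given as (SID, mask); None is a null DACL."""
    if dacl is None:
        # A null DACL grants full access to all SIDs, so every verb matches.
        return {(ALL_SIDS, medium, name) for name in verbs}
    granted: Dict[str, int] = {}
    for sid, mask in dacl:
        granted[sid] = granted.get(sid, 0) | mask  # accumulate rights per SID
    return {
        (sid, medium, name)
        for sid, mask in granted.items()
        for name, required in verbs.items()
        if (mask & required) == required
    }
```

Note that an empty DACL (as opposed to a null one) yields no vertices, since it grants no rights to anyone.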

The vertices that are created as a result of this process can be combined together to form data flows as described in §3.1. A data flow may also be created in circumstances where the verb of a given vertex is related to a verb having the criterion IMPLICIT_USE. This criterion captures behavior that implicitly follows from a definition. For example, the act of writing data into a process address space can implicitly lead to the execution of the injected data as code.
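A minimal sketch of combining vertices into data flows, including flows for verbs with the IMPLICIT_USE criterion. Because an implicit use never appears in the log, this sketch synthesizes the use vertex and attributes it to the actor that defined the medium; that attribution, the `implicit` and `definers` tables, and the verb names are assumptions made for illustration only.

```python
from typing import Dict, Iterable, Set, Tuple

Vertex = Tuple[str, str, str]  # (actor, medium, verb name)
Flow = Tuple[Vertex, Vertex]   # (definition d, use u)


def build_flows(
    vertices: Iterable[Vertex],
    complementary: Set[Tuple[str, str]],
    implicit: Dict[str, str],
    definers: Dict[str, str],
) -> Set[Flow]:
    """
    complementary: (definition verb, use verb) pairs that form a data flow
    implicit:      definition verb -> IMPLICIT_USE verb it triggers
    definers:      medium -> actor that defined it
    """
    vs = list(vertices)
    flows: Set[Flow] = set()
    for d in vs:
        # Ordinary flows: complementary verbs operating on the same medium.
        for u in vs:
            if d[1] == u[1] and (d[2], u[2]) in complementary:
                flows.add((d, u))
        # IMPLICIT_USE flows: synthesize the use and attribute it to the
        # medium's definer (an assumption of this sketch).
        if d[2] in implicit and d[1] in definers:
            flows.add((d, (definers[d[1]], d[1], implicit[d[2]])))
    return flows
```

Usage: a vertex `("S-1-5-11", "proc:42", "write_memory")` with `implicit = {"write_memory": "execute"}` and `definers = {"proc:42": "S-1-5-18"}` produces a flow into `("S-1-5-18", "proc:42", "execute")`, capturing injected data being run as code.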

Once generated, a DFG can be analyzed to derive a number of properties including the set of trust boundaries that exist, the potential threats posed to each domain of trust, and the risks posed to specific regions of code.

For the purpose of this paper, a trust boundary is defined as a medium, ![]() , that allows data to flow between domains of trust. The set of trust boundaries

, that allows data to flow between domains of trust. The set of trust boundaries

![]() that exist within a DFG can be derived from the subset of data flows where the definition and use actors are not equal such that

that exist within a DFG can be derived from the subset of data flows where the definition and use actors are not equal such that

![]() given

given ![]()

![]()

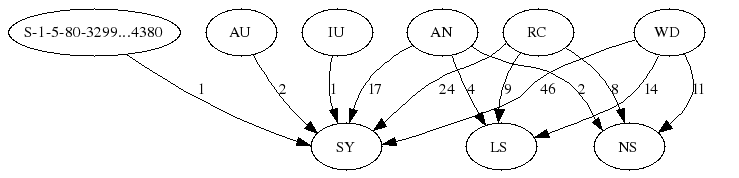

![]() . In other words, a data flow involving different domains of trust must implicitly cross a trust boundary. The subset of data flows that cross a trust boundary compose a trust boundary data flow graph (TBDFG). Figure 2 provides a summary of a TBDFG where each edge conveys the number of data flows, and thus potential elevation paths, involving

. In other words, a data flow involving different domains of trust must implicitly cross a trust boundary. The subset of data flows that cross a trust boundary compose a trust boundary data flow graph (TBDFG). Figure 2 provides a summary of a TBDFG where each edge conveys the number of data flows, and thus potential elevation paths, involving ![]() and

and ![]() .

.
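Deriving the set of trust boundaries and the per-edge counts of a TBDFG summary from a set of data flows takes only a few lines. This sketch assumes flows are represented as pairs of `(actor, medium, verb)` tuples; the function names are hypothetical.

```python
from collections import Counter
from typing import Set, Tuple

Vertex = Tuple[str, str, str]  # (actor, medium, verb name)
Flow = Tuple[Vertex, Vertex]   # (definition d, use u)


def trust_boundaries(flows: Set[Flow]) -> Set[str]:
    """TB: mediums of flows whose definition and use actors differ."""
    return {d[1] for d, u in flows if d[0] != u[0]}


def tbdfg_edge_counts(flows: Set[Flow]) -> Counter:
    """Number of boundary-crossing flows per (a_d, a_u) pair of a TBDFG."""
    return Counter((d[0], u[0]) for d, u in flows if d[0] != u[0])
```

Flows whose definition and use actors are the same SID are dropped by both functions, since they stay within one domain of trust.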


The flow of data between domains of trust can lead to threats such as elevation of privilege and denial of service, as categorized by STRIDE[10]. Determining which data flows pose a threat is entirely dependent on the perspective of a domain of trust. In the following descriptions, the relation $x \prec y$ can be interpreted as "$x$ is less privileged than $y$".

From a defensive perspective, a defense horizon can provide an understanding of the threats posed to a given domain of trust, $a$. A defense horizon is composed of the subset of data flows which may result in other domains of trust threatening $a$ with a set of threats $t_u$. This is captured by

$$DH(a) = \{ (d, u) \in E \mid a_u = a \wedge a_d \prec a \}$$

given $d = (a_d, m_d, b_d)$, $u = (a_u, m_u, b_u)$, and $b_u = (n_u, c_u, t_u)$.

Conversely, the attack horizon for a domain of trust can provide an understanding of the threats posed by a given domain of trust. An attack horizon is composed of the subset of data flows which may result in $a$ threatening other domains of trust with a set of threats $t_u$. This is captured by

$$AH(a) = \{ (d, u) \in E \mid a_d = a \wedge a \prec a_u \}$$

given $d = (a_d, m_d, b_d)$, $u = (a_u, m_u, b_u)$, and $b_u = (n_u, c_u, t_u)$.
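A sketch of computing defense and attack horizons over a set of flows. The "less privileged than" relation is realized here as a hypothetical total order over four well-known SIDs (Everyone, Users, Administrators, SYSTEM); real privilege relationships form at most a partial order, so `RANK` should be read as a placeholder. Each vertex carries the threat set of its verb so that the threats $t_u$ can be collected.

```python
from typing import Dict, FrozenSet, Set, Tuple

Vertex = Tuple[str, str, str, FrozenSet[str]]  # (a, m, verb name n, verb threats t)
Flow = Tuple[Vertex, Vertex]                  # (definition d, use u)

# Hypothetical total order standing in for "less privileged than":
# Everyone < Users < Administrators < SYSTEM.
RANK: Dict[str, int] = {"S-1-1-0": 0, "S-1-5-32-545": 1, "S-1-5-32-544": 2, "S-1-5-18": 3}


def less_privileged(x: str, y: str) -> bool:
    return RANK[x] < RANK[y]


def defense_horizon(flows: Set[Flow], a: str) -> Set[Flow]:
    """Flows whose use actor is a and whose definition actor is less privileged."""
    return {(d, u) for d, u in flows if u[0] == a and less_privileged(d[0], a)}


def attack_horizon(flows: Set[Flow], a: str) -> Set[Flow]:
    """Flows whose definition actor is a and whose use actor is more privileged."""
    return {(d, u) for d, u in flows if d[0] == a and less_privileged(a, u[0])}


def threats(horizon: Set[Flow]) -> Set[str]:
    """Union of the threat sets t_u carried by the use vertices' verbs."""
    return {t for _, u in horizon for t in u[3]}
```

The same flow appears in the defense horizon of the more privileged actor and the attack horizon of the less privileged one, which is the symmetry the two definitions express.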

Data flows can be further classified in terms of whether or not they are actualized. An actualized data flow exists whenever the use vertex $u$ was created as a result of the access rights granted when an object was dynamically defined or used. On the other hand, a potential data flow exists whenever the use vertex $u$ was created as a result of the access rights granted by an object's security descriptor.
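A minimal sketch of the actualized/potential split, assuming each vertex is tagged with how it was derived from the trace log and, as the example of an administrator who may never execute a file suggests, that the classification depends on the origin of the use vertex. The tag values `DYNAMIC` and `DESCRIPTOR` are hypothetical.

```python
from typing import Iterable, List, Tuple

# Hypothetical tags recording how a vertex was derived from the object trace log.
DYNAMIC = "dynamic"        # from a record of an object being defined or used
DESCRIPTOR = "descriptor"  # from an ACE in an object's security descriptor

Vertex = Tuple[str, str, str, str]  # (actor, medium, verb, origin)
Flow = Tuple[Vertex, Vertex]        # (definition d, use u)


def is_actualized(flow: Flow) -> bool:
    """A flow is actualized when its use vertex came from a dynamic define or use."""
    _, u = flow
    return u[3] == DYNAMIC


def partition(flows: Iterable[Flow]) -> Tuple[List[Flow], List[Flow]]:
    """Split flows into (actualized, potential), preserving input order."""
    actualized: List[Flow] = []
    potential: List[Flow] = []
    for flow in flows:
        (actualized if is_actualized(flow) else potential).append(flow)
    return actualized, potential
```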

Actualized data flows are interesting from an analysis perspective because they represent threats that can be immediately acted upon. Potential data flows are more difficult to interpret from an analysis perspective as they may never become actualized. For example, the ability for all users to write to a file that can be executed by an administrator produces a potential data flow with a threat that could allow all users to elevate privileges to administrator. However, this is predicated on the administrator actually executing the file which may never occur in practice.

It is not always easy to determine what code is responsible for exposing a trust boundary when assessing the security of a program. This determination can be made easier by taking into account the call stacks that are logged to an object trace log when an object is defined or used. This data makes it possible to determine how different areas of code contribute to a program's overall risk. This understanding may benefit traditional program analysis by helping to scope analysis to areas of a program with higher risk attributes based on their exposure to a trust boundary. This data could also be used to support security metrics that relate to code exposure[5,9].
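One simple way to use the logged call stacks is to count how often each image appears in stacks captured when a trust-boundary medium was defined or used. The sketch assumes every return address has already been resolved to an image path (with `None` for unresolved addresses); `exposure_by_image` is hypothetical, and this count is only an illustrative exposure signal, not one of the metrics from [5,9].

```python
from collections import Counter
from typing import Iterable, List, Optional


def exposure_by_image(stacks: Iterable[List[Optional[str]]]) -> Counter:
    """
    Count, for each image, how many trust-boundary call stacks it appears in.

    Each stack lists the image path of every frame's return address, as logged
    when a trust-boundary medium was defined or used; None marks an address
    that fell outside every known image.
    """
    counts: Counter = Counter()
    for stack in stacks:
        images = {image for image in stack if image is not None}
        counts.update(images)  # each image counted at most once per stack
    return counts
```

Counting each image once per stack keeps a recursive or repeated frame from inflating that image's apparent exposure.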

To better illustrate how this model can be useful, it is helpful to consider some of the ways in which it can be applied.

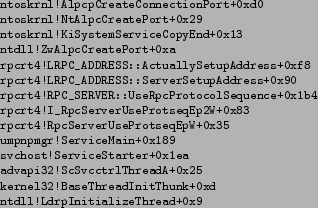

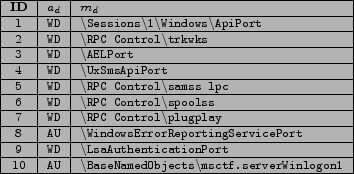

ALPC ports represent a good target for elevation of privilege attacks due to their client-server nature. Figure 3 provides a subset of the ALPC port data flows that compose the defense horizon for SYSTEM on a default installation of Windows Vista SP1. When analyzing these data flows it is possible to determine what code was responsible for exposing a given trust boundary by inspecting the call stack that was captured at the time that a server-side ALPC port object was defined. This allows an auditor to quickly identify code that may be at risk. For example, the following call stack led to the creation of a trust boundary through \RPC Control\plugplay from figure 3.


A privilege elevation can occur whenever a low-privileged SID is allowed to change the configuration of a service. In that case, a lesser privileged SID can execute arbitrary code with the privileges of SYSTEM, since it is possible to alter both the image file of the service and the credentials that the service executes with. This allows a given SID to threaten to elevate privileges to SYSTEM. In other words, a data flow exists such that $d = (a_d, m, b_d)$ and $u = (\text{S-1-5-18}, m, b_u)$ whenever $a_d \prec \text{S-1-5-18}$, where $m$ is a service object, $b_d$ is a verb whose criteria require SERVICE_CHANGE_CONFIG, and $b_u$ is the verb through which SYSTEM executes the service's image file. The default installation of Windows Vista SP1 has no data flows that enable this specific elevation path. Previous work has also shown how this elevation path can be detected[7].
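A minimal sketch of checking a service DACL for this elevation path. It assumes the DACL is given as access-allowed `(SID, mask)` pairs (deny ACEs are ignored) and that the caller supplies the set of SIDs considered SYSTEM-equivalent; `config_elevation_sids` and the `CONFIG_CONTROL` grouping are hypothetical. The constants are the real winnt.h/winsvc.h values: for services, GENERIC_WRITE maps to a mask that includes SERVICE_CHANGE_CONFIG and GENERIC_ALL maps to SERVICE_ALL_ACCESS, while WRITE_DAC and WRITE_OWNER let a holder grant itself SERVICE_CHANGE_CONFIG.

```python
from typing import List, Optional, Set, Tuple

# Access mask values from winnt.h / winsvc.h.
SERVICE_CHANGE_CONFIG = 0x00000002
WRITE_DAC = 0x00040000
WRITE_OWNER = 0x00080000
GENERIC_ALL = 0x10000000
GENERIC_WRITE = 0x40000000

# Rights that confer, or can be used to acquire, SERVICE_CHANGE_CONFIG.
CONFIG_CONTROL = SERVICE_CHANGE_CONFIG | WRITE_DAC | WRITE_OWNER | GENERIC_ALL | GENERIC_WRITE

EVERYONE = "S-1-1-0"


def config_elevation_sids(
    dacl: Optional[List[Tuple[str, int]]],
    privileged: Set[str],
) -> Set[str]:
    """
    Return the SIDs outside `privileged` that can change a service's
    configuration. A null DACL (None) grants full access to everyone.
    """
    if dacl is None:
        return {EVERYONE} - privileged
    return {sid for sid, mask in dacl if mask & CONFIG_CONTROL and sid not in privileged}
```

Usage: with `privileged = {"S-1-5-18", "S-1-5-32-544"}`, an ACE granting Authenticated Users (S-1-5-11) SERVICE_CHANGE_CONFIG is reported, while ACEs granting Users only query and enumerate rights are not.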

An application's trust boundaries and data flows must be understood in order to identify relevant threats. Threat modeling is a valuable tool that can be used to help provide this understanding of an application's design. Still, it can be difficult to map this design understanding to an application's actual implementation. This can lead to divergences in one's understanding of the threats that actually exist. It can also impact an auditor's ability to know which components may encounter untrusted data. These deficiencies point to the need for techniques that can help to derive trust boundary information from an application's implementation.

This paper has shown how to model the trust boundaries that are created by securable objects on Windows. Dynamic instrumentation was used to create object trace logs which contain information about the contexts in which securable objects are defined, used, and updated. The object trace log data was then used to model and reason about the data flows, trust boundaries, and threats permitted by securable object access rights. Future work will attempt to extend this model to other types of trust boundaries in an effort to gain a more complete understanding of the trust boundaries that exist within a given system.

This document was generated using the LaTeX2HTML translator Version 2002-2-1 (1.71)


The translation was initiated on 2008-07-13.