|

| |||||||||||||||||||||||||||||||||||||||||||||||||||

| ||||||||||||||||||||||||||||||||||||||||||||||||||||

Paper - Proceedings of the 3rd USENIX Windows NT Symposium,

July 12-15, 1999, Seattle, Washington

[Technical Program]

Reducing Startup Latency in Web and Desktop ApplicationsDennis Lee, Jean-Loup Baer, Brian Bershad, and Tom Anderson Date:

AbstractApplication startup latency has become a performance problem for both desktop applications and web applications. In this paper, we show that much of the latency experienced during application startup can be avoided by more efficiently packing application code pages. To take advantage of more efficient packing, we describe the implementation of demand paging for web applications. Finally, we show that combining demand paging with code reordering can improve application startup latency by up to 58%.

IntroductionProgram startup latency is an important performance problem for both desktop and web applications. Desktop applications are becoming larger and improvements in disk speeds have not kept up with the improvements in CPU speed. For example, Microsoft Word 2.0 on an Intel 66 Mhz 486 takes about 14 seconds to start, while Microsoft Word 7.0 on a 200 Mhz Pentium Pro occasionally takes 17 seconds to start. The situation is worse for web applications on the Internet. For many users, web applications take a long time (a few minutes) to start because they connect to the Internet through high latency, low bandwidth links (i.e., modems). For example, Vivo, a popular video display and control application, takes over 8 minutes to download over a 56 Kbps modem link and over 2 minutes on a 256 Kbps DSL link. A similar problem occurs in the context of corporate intranets: mobile professionals frequently download new versions of internal corporate web applications and experience frustrating wait times for these applications.

Researchers have recently proposed several ways for improving startup latency including compression [Enst et al. 97,Franz & Kistler 97], non-strict execution [Krintz et al. 98], just-in-time code layout [Chen & Leupen 97], and optimizing disk layout [Melanson 98] . We propose an orthogonal technique that uses code reordering [Pettis & Hansen 90] and demand paging [Levy & Redell 82] to improve the startup latency of web and desktop applications. Our approach essentially improves startup time by improving the effectiveness of demand paging systems: we place procedures that will probably be used by the application into a single contiguous block in the binary. This approach can improve the startup latency of web applications by more than 58% and that of desktop applications by more than 45%.

The rest of this paperSection 2 presents the motivation and architecture for improving application startup latency. Section 3 describes our experimental methodology and setup. In Section 4, we present the results of our measurements. Finally, Section 5 concludes.

| ||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

|

Web Applications

|

We profiled four web applications and five desktop applications 1 (c.f., Table 1) to determine if there was an opportunity to improve startup time by improving the layout of procedures in a program binary.

| ||||||||||||||||||||||||||||||||||||||||||

Table 2 shows the statistics derived from profiling four web applications. We ran these applications to completion with a typical workload to determine how many procedures in the application are actually used. The table shows that the applications utilize only between 38% (envoy) and 84% (scout) of the bytes in their program binaries. Typically, web applications download their entire program binaries before starting. The utilization statistics suggest that startup times could be significantly improved if we download only the procedures actually used by the application.

Table 3 shows statistics for code pages of different desktop applications during startup. The binary for desktop applications is demand paged so we examine the utilization of the code pages brought in during startup. The table shows that only 26% ( netscape) to 47% (word) of these pages are utilized. Interestingly, netscape and photoshop touch almost every code page in their main binary during startup suggesting that even with demand paging the entire application is loaded to memory from disk. The low page utilization numbers for all applications suggest that like web applications, startup latency can be improved by bringing in only the actual procedures used by the application.

| |||||||||||||||||||||||||||||||||||

The previous subsection showed that much of the code transferred over the network or transferred from the disk is not used by the application. Our approach uses profile information and an object rewriting system to move the likely-used procedures in the binary together. Essentially, pages get packed better to allow the demand paging system to transfer only the used procedures in the application.

Figure 1 shows a diagram of the object rewriting phases of our approach. We use profile information to predict with high accuracy which part of the application would be used. Using an object rewriting engine, we then move the likely-used procedures together at the top of the code section. For our experiments, we simply arrange the code section in first-touch order. Ordering using procedure affinity [Pettis & Hansen 90] might be better for locality but first touch order works well enough for our goal of improving startup latency.

For desktop applications, the system is done after generating the binary from the code reordering phase. The built-in O/S demand paging system will load in only the fraction of the pages containing the used procedures.

For web applications, the system needs to be able to download only the part of the application required for execution. The code-splitting module splits the binary into (1) a large main binary that contains the data portion of the binary and the likely-used procedures, and (2) several page-sized files containing the unlikely-used procedures. At runtime, when the client requests the web application, the server only transfers the main binary. When the client needs an unlikely-used procedure, it has to request the page-sized file that contains the procedure.

Our client architecture extends demand paging to web applications. This provides a convenient mechanism to detect missing pages and to allow the system to function correctly when control passes to functions that are not present in the initial download.

Figure 2 shows a diagram of what happens on the web client during program runtime. When a web application is accessed by the client browser, only pages containing the data and likely-used portion of the binary are downloaded. The part of the binary that has not been downloaded is marked PAGE_NO_ACCESS by the system.

If the application transfers control to a page that is marked PAGE_NO_ACCESS, the page-fault handler is invoked. We modified the handler to contact the web server, download the file containing the page, and place the page in the appropriate location in application memory. 2

To determine the startup latency, our timing system (1) invokes the application, and (2) simulates a user initiated event by sending a message to an application window. We define startup latency as the time from the invocation of the application to the time the application replies to the message sent by the timing system.

Our timing method works because of the Windows NT event queue model and the way most Windows applications are written. Under Windows NT, windows are assigned to threads, and messages are sent to thread-local event queues. Messages are delivered to this queue and do not interrupt the execution of the owning thread. For most applications, threads process their message queue only when they have finished initializing and are ready to respond to user input.

Occasionally, a thread responsible for the main application window will respond to a user message even when other threads are still drawing other windows (e.g., tool bar windows). Since users are unlikely to interact with the application until after all the initial windows have been drawn, the timing system sends a message to the last window drawn instead of the main application window.

For our web server, we use Apache 1.3b5 running on FreeBSD 2.2.6. To control the bandwidth and latency between the web server and the client, we installed the dummynet [Rizzo 99] patch to the BSD kernel. Our Internet application experiments looked at a range of bandwidths (from 56 Kbits/second to 3 Mbits/second) and latencies (from 10 ms. to 200 ms.) which cover the range of network conditions on the Internet.

The application server used in our experiments with application startup latency was a Pentium Pro 200 system running Windows NT 4.0 service pack 3 with 64 MB of memory. Unfortunately, we could not control the bandwidth or latency to the application server on NT so our measurements for desktop applications only involve communication on a single shared 10 Mbit Ethernet link.

We used Etch [Romer et al. 97,Lee et al. 98], a binary instrumentation and rewriting engine, to profile and rewrite the applications used in this study. For all our experiments, we profile and reorder only the main application binary. For our prototype implementation, we simulate having an augmented page fault handler using the Windows NT debugger API [Microsoft 98]. The web browser is run in the context of a custom debugger.

In this section, we present the results of our experiments optimizing the startup latency. Section 4.1 describes the results for web applications, and Section 4.2 describes the results for desktop applications.

In this subsection, we show the performance of our optimization on web applications. We first present a performance model describing the startup latency of web applications and show how our optimization improves startup latency. We then compare four different schemes for starting web applications.

Equation 1 is a simple model for predicting the startup latency of web applications:

where:

Equation 1 suggests that we can improve startup time by reducing the number of bytes transferred and transfer all the needed bytes in a single requests. Unfortunately, we cannot predict with perfect accuracy the actual bytes that the application would use. Different approaches thus have to strike a balance between including as little as possible into the initially downloaded package and paying the cost of extra requests.

Obviously, an approach would be faster than another if it made fewer requests and transferred fewer bytes. However, if an ``improved'' approach reduces the number of bytes transferred at the cost of more requests then it would be faster than the original approach, if and only if:

Equation 3 implies that the performance of one approach relative to another is closely related to the bandwidth-latency product. An improved approach may be faster when the bandwidth-latency product is small but the same approach might actually be slower when the bandwidth-latency product is large.

We compare the performance of four approaches to starting web applications. These approaches examine the performance of demand paging and reordering, and probe the space of trade-offs between (1) eager approaches which download more bytes in a few requests, and (2) lazy approaches which download less bytes in many requests.

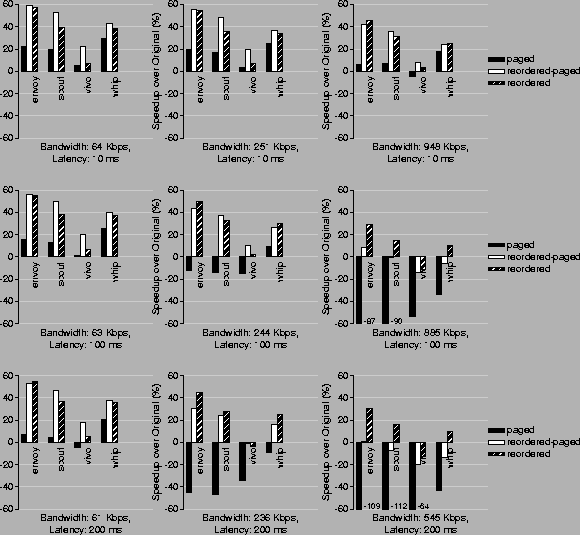

We implemented prototypes of each of these approaches. Each prototype reorders, pages, and downloads only the main application binary and not the libraries that the binaries depend upon. Figure 3 shows the results of our experiment starting applications using the different approaches and network conditions. 3 The figure shows the improvement in startup latency for a workload that is different from the profiled workload used to reorder the binary (c.f., Section 2.2).

|

We highlight a few trends from the figure:

The figure also shows a case where our methods are not very effective. For most cases with vivo, reordered and reordered-paged do not do significantly better than original. Since vivo is already fairly well compacted (84% of the binary is used, c.f., Table 2), we are hard pressed to make the binary more efficient.

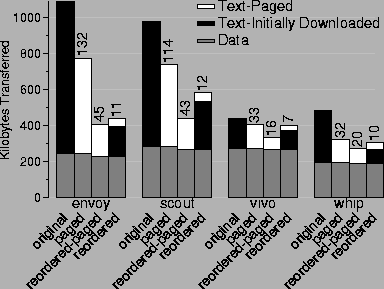

Figure 4 shows the breakdown of the bytes downloaded during the startup of the different applications. Paged downloads fewer bytes than original but generates a fair number of server requests. As shown in Figure 3, this does not work well in the presence of high latency. As expected, reordered-paged downloads the least number of bytes and has significantly fewer server requests compared to paged. This explains why reordered-paged is always better than paged.

|

Reordered reduces the number of server requests further but transfers more bytes. The figure shows that reordered still experiences some faults and downloads more bytes than reordered-paged. The reason is three-fold. First, the measured workload is different from the profiled workload. We expect that some of the code used in one run would not be used in the other. Second, we profiled the entire program run, rather than just startup, to avoid faults even in the middle of the program. Hence, we initially download code that may not be used immediately. This is the case for reordered in scout. Finally, our profile does not identify the use of data embedded in the code section. This may cause faults on access to code pages containing data.

Reducing the number of bytes transferred has benefits other than reducing the startup latency of web applications. [Banga & Druschel 99,Bradford & Crovella 98] showed that having a large number of open connections on a web server can cause serious performance degradation. By reducing the amount of work that the server has to perform, our techniques can reduce the duration of each connection hence reducing the load on servers serving up these Web applications.

In this subsection, we examine the effect of reordering on the startup latency of desktop applications. We consider the effects of reordering on four different states of the O/S file cache:

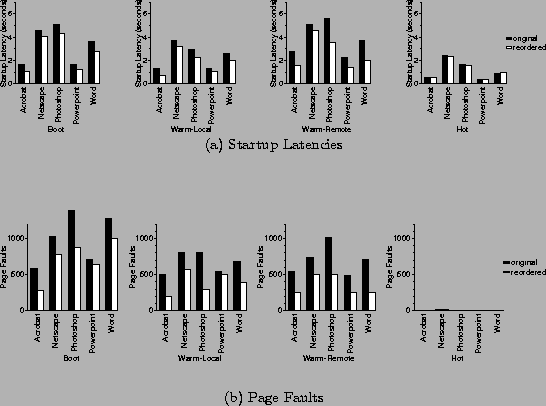

We ran the original and reordered application under these four scenarios. To filter out spurious results, we performed each of our measurements at least ten times. We dropped the runs with the highest and lowest times, and report the average of the other runs. Figure 5 shows the results of these experiments.

|

For all applications and configurations, reordering improves application startup latency. The two warm scenarios show that reordering the binary can significantly reduce startup time. It is especially effective for photoshop and word as they improve their application startup time by almost 2 seconds each. Page fault rates decrease a corresponding amount for the cases where we improve the application startup latency. This verifies that a dominant cost during startup are page-faults.

Since we only reordered the main application binary, we expect to mildly improve the boot case. The experiment shows that this is not the case. Many of the shared libraries are loaded into the file buffer cache during boot time (as shown by the small difference between the boot and the warm cases). Hence, reordering just the application binary time shows noticeable improvement even in the boot case.

We also included the hot case to see if reordering procedures in first-touch order would slow down this case. The figure shows that for the hot case, reordering only mildly affects the application startup time, all the differences were less than a tenth of a second.

<440>>

Our system is complementary to several work in improving program startup latency for web applications and traditional applications. For web applications, [Krintz et al. 98] describe a system for improving the startup latency and execution time of Java applications by overlapping the execution of code with download. Their approach does not not reduce the amount of code downloaded from the server but requires only a single server access. [Enst et al. 97] and [Franz & Kistler 97] describe techniques for code compression. Our technique is orthogonal and may be improved using compression.

For traditional applications, [Melanson 98] describes a technique for improving startup latency by reordering pages on disk and placing frequently used pages in the fast part of the disk. Our approach better packs each page allowing fewer pages to be used.

Our work builds upon years of work in code reordering. [Pettis & Hansen 90] is the classic in the field. [Chen & Leupen 97] describe a system for ``just-in-time'' code layout. Their system dynamically lays out procedures in a binary in application memory as they are needed by the program. Our system performs code layout off-line and use the paging system to load in the needed pages. We expect their system to achieve better code density but require more accesses to the disk (or network).

We have implemented a system that uses code reordering and demand paging to improve the startup latency of web and desktop applications. Our measurements on a prototype implementation show that the combination of these optimizations can improve program startup latency of web applications by as much as 58% and desktop applications by as much as 45%.

For web applications, the approaches to improving startup time have to carefully balance the competing requirements of downloading as few bytes as possible and accessing the server the least number of times. This balance is especially important for networks with a high latency-bandwidth product.

This graph shows the startup latency from all our experiments with web applications under various network conditions.

This document was generated using the LaTeX2HTML translator Version 98.1p1 release (March 2nd, 1998)

Copyright © 1993, 1994, 1995, 1996, 1997, Nikos Drakos, Computer Based Learning Unit, University of Leeds.

The command line arguments were:

latex2html -split 0 paper3.

The translation was initiated by Dennis Lee on 1999-06-01

|

This paper was originally published in the

Proceedings of the 3rd USENIX Windows NT Symposium,

July 12-15, 1999, Seattle, Washington, USA

Last changed: 27 Feb 2002 ml |

|